Category: TECHNOLOGY

OpenAI is testing a version of GPT-4 that can ‘remember’ long conversations

OpenAI has built a version of GPT-4, its latest text-generating model, that can “remember” roughly 50 pages of content thanks to a greatly expanded context window.

That might not sound significant. But it’s four times as much information as the vanilla GPT-4 can hold in its “memory” and eight times as much as GPT-3.5.

“The model is able to flexibly use long documents,” Greg Brockman, OpenAI co-founder and president, said during a live demo this afternoon. “We want to see what kinds of applications [this enables].”

Where it concerns text-generating AI, the context window refers to the text the model considers before generating additional text. While models like GPT-4 “learn” to write by training on billions of examples of text, they can only consider a small fraction of that text at a time — determined chiefly by the size of their context window.

Models with small context windows tend to “forget” the content of even very recent conversations, leading them to veer off topic. After a few thousand words or so, they also forget their initial instructions, instead extrapolating their behavior from the last information within their context window rather than the original request.
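The forgetting described above can be sketched in a few lines of Python. This is a toy illustration, not how OpenAI's models are implemented: the `build_context` helper is hypothetical, token counts are approximated by whitespace-separated words, and the oldest messages are simply dropped once the budget is exceeded.

```python
from collections import deque


def build_context(messages, max_tokens):
    """Return the most recent messages that fit within max_tokens.

    Assumption: one whitespace-separated word counts as one token.
    Real models use a subword tokenizer, so actual counts differ.
    """
    kept = deque()
    used = 0
    # Walk backward from the newest message, keeping whatever still fits.
    for msg in reversed(messages):
        cost = len(msg.split())
        if used + cost > max_tokens:
            break  # everything older than this point is "forgotten"
        kept.appendleft(msg)
        used += cost
    return list(kept)


conversation = [
    "SYSTEM: be a helpful, respectful assistant",
    "USER: I live in Canada and I hate Wednesdays",
    "BOT: noted",
    "USER: can you suggest a day for a meeting",
    "BOT: how about Wednesday",
]

small = build_context(conversation, max_tokens=14)
large = build_context(conversation, max_tokens=100)

print(small)  # only the last two messages survive
print(large)  # the full conversation, initial instructions included
```

With the small budget, both the initial instructions and the user's stated preference fall out of the window; with the larger one, everything is still available when the next reply is generated.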

Allen Pike, a former software engineer at Apple, colorfully explains it this way:

“[The model] will forget anything you try to teach it. It will forget that you live in Canada. It will forget that you have kids. It will forget that you hate booking things on Wednesdays and please stop suggesting Wednesdays for things, damnit. If neither of you has mentioned your name in a while, it’ll forget that too. Talk to a [GPT-powered] character for a little while, and you can start to feel like you are kind of bonding with it, getting somewhere really cool. Sometimes it gets a little confused, but that happens to people too. But eventually, the fact it has no medium-term memory becomes clear, and the illusion shatters.”

We’ve not yet been able to get our hands on the version of GPT-4 with the expanded context window, gpt-4-32k. (OpenAI says that it’s processing requests for the high- and low-context GPT-4 models at “different rates based on capacity.”) But it’s not difficult to imagine how conversations with it might be vastly more compelling than those with the previous-gen model.

With a bigger “memory,” GPT-4 should be able to converse relatively coherently for hours — several days, even — as opposed to minutes. And perhaps more importantly, it should be less likely to go off the rails. As Pike notes, one of the reasons chatbots like Bing Chat can be prodded into behaving badly is because their initial instructions — to be a helpful chatbot, respond respectfully and so on — are quickly pushed out of their context windows by additional prompts and responses.

It can be a bit more nuanced than that. But the context window plays a major part in grounding the models, without a doubt. In time, we’ll see what sort of tangible difference it makes.

OpenAI is testing a version of GPT-4 that can ‘remember’ long conversations by Kyle Wiggers originally published on TechCrunch

First Republic bounces back as SVB panic lessens

Shares of First Republic Bank, a financial institution in the United States that does business with startups, are rebounding today after a punishing start to the trading week.

Caught in the wake of the shock collapse of erstwhile rival Silicon Valley Bank, shares of First Republic fell 62% yesterday. Investors, concerned that actions taken over the weekend by the American government to stem a potentially budding banking crisis might not be sufficient, sold First Republic and other, related banking stocks like PacWest and Western Alliance.

Today they are all on the bounce: First Republic is up around 57% as of the time of writing, PacWest is up 76%, and Western Alliance is up a comparatively modest 44%.

Before the government stepped in to calm markets by ensuring that depositors were whole and liquid at SVB, there was concern in the financial world that smaller banks (measured by total assets) could lose their appeal as places to do business. After all, why risk banking with a smaller institution if it could fail, whereas larger institutions could be considered too large to do so? It now appears that a far greater portion of the American banking industry will not be allowed to fail in a disorderly fashion, making the First Republics of the nation a sufficiently safe place to bank. Hence, a share-price rebound today at many banks that took stick yesterday.

While First Republic does work with tech clients, it is not as tech-centered as SVB was; First Republic’s recent 8-K filing reported that “no one sector [of its client base] represents more than 9% of total deposits,” adding that tech was just 4%. Oddly, despite the massive decline and rebound in the value of banks like First Republic, they could wind up with a good run of new deposits, provided former SVB customers choose them over larger banks.

It is possible to read the rebound in the value of smaller American banks as an indication that investors consider the risk of contagion to be falling. In contrast, yesterday when the same banks were crashing, we inferred the opposite. That’s how fast the news has been moving in recent weeks.

First Republic bounces back as SVB panic lessens by Alex Wilhelm originally published on TechCrunch

https://techcrunch.com/2023/03/14/first-republic-bounces-back-as-svb-panic-lessens/

Security giant Rubrik says hackers used Fortra zero-day to steal internal data

Silicon Valley-based data security company Rubrik has come forward as the latest victim of the Fortra GoAnywhere zero-day vulnerability, which has been linked to hacks targeting a hospital chain and a bank.

In a blog post published on Tuesday, Rubrik’s chief information security officer Michael Mestrovich said that attackers had gained access to the company’s non-production IT testing environments as a result of the flaw in Fortra’s GoAnywhere file-transfer software, which Rubrik uses for sharing internal data.

This vulnerability, tracked as CVE-2023-0669, first came to light on February 2 after security journalist Brian Krebs publicly shared details of Fortra’s paywalled security advisory. Fortra released a patch for the actively exploited flaw five days later on February 7.

Mestrovich said that since learning of the flaw last month, Rubrik conducted a “comprehensive review” of the affected data with an unnamed third-party firm, which found that the data accessed mainly consists of Rubrik internal sales information, including “certain customer and partner company names, business contact information, and a limited number of purchase orders from Rubrik distributors.”

“The third-party firm has also confirmed that no sensitive personal data such as Social Security numbers, financial account numbers, or payment card numbers were exposed,” Mestrovich said.

Rubrik provides enterprise data management and backup services across on-premises, cloud and hybrid networks.

In a statement, Rubrik spokesperson Najah Simmons told TechCrunch that the “unauthorized access did not include any data we secure on behalf of our customers via any Rubrik products.” Simmons declined to answer any additional questions, such as whether Rubrik has received or been made aware of a demand for payment.

Rubrik’s confirmation comes just hours after a listing naming the company appeared on the dark web leak site of the Clop ransomware gang. Samples of stolen data published by Clop, and seen by TechCrunch, align with Rubrik’s statement that the data comprised mostly corporate information.

The Russia-linked Clop gang claims to have exploited the zero-day flaw to steal data from more than 130 organizations — including Hatch Bank, and Community Health Systems, which last week confirmed in a filing with the Maine attorney general’s office that the hackers accessed medical billing and insurance information, diagnostic and medications data, and Social Security numbers.

Back in 2019, Rubrik suffered a security lapse that exposed a massive database of customer information. An exposed server that wasn’t protected with a password left tens of gigabytes of data, including customer names, contact information and casework for each corporate customer, accessible to anyone who knew the IP address of the server.

Security giant Rubrik says hackers used Fortra zero-day to steal internal data by Carly Page originally published on TechCrunch

TechCrunch+ roundup: Beyond the Turing Test, 3 VCs on SVB, usage-based pricing tactics

A friend recently asked me to identify a block of ChatGPT text that they’d embedded in an email. I was able to easily, but only because the passage was particularly boring and didn’t sound like them at all.

Although generative AI is exceeding my expectations, the Turing Test is mostly intact in my personal experience. But for how much longer?

Entrepreneur/investor Chris Saad says we need a new benchmark that goes beyond Turing’s “simplistic pass/fail basis,” which is why he developed “a new approach to evaluating AI capabilities based on the Theory of Multiple Intelligences.”

When I moved to San Francisco, the quirky rotunda at 532 Market Street was a Sharper Image store full of plasma balls and tourists trying out massage chairs.

The E*Trade branch that took over the space closed a few years ago, but last August, it got a new tenant: Silicon Valley Bank. Sigh.

Downtown SF hasn’t bounced back from the pandemic, but this is a prime location with lots of foot traffic. Hopefully, after Silicon Valley Bridge Bank winds up its operations, a viable business will move in.

But that’s just one street corner. The second-largest bank failure in U.S. history is going to reshape the startup ecosystem for years to come.

Silicon Valley Bank was more than just a preferred choice for managing payroll and investor cash: It also offered wealth management services and below-market-rate home loans, and helped coordinate private stock sales. For many clients it was mandatory as well, since their contracts required them to “use the firm for all or most of their banking services,” CNBC reported.

So where does this bank’s collapse leave the tech industry? Who’s most vulnerable, who stands to benefit, and what are some of the long-term implications for VC? To learn more, Karan Bhasin and Ram Iyer interviewed:

- Maëlle Gavet, CEO, Techstars

- Niko Bonatsos, managing director, General Catalyst

- Colin Beirne, partner, Two Sigma Ventures

“We’re probably going to see consolidation in the VC class,” said Gavet.

“It was already on the way, but this is probably going to accelerate it, because SVB was also a preeminent provider of loans for GPs to make their capital commitment polls.”

Thanks very much for reading,

Walter Thompson

Editorial Manager, TechCrunch+

@yourprotagonist

The AI revolution has outgrown the Turing Test: Introducing a new framework

Image Credits: themacx / Getty Images (Image has been modified)

A friend recently asked me to identify a block of ChatGPT text that they’d embedded in an email. I was able to easily, but only because the passage was particularly boring and didn’t sound like them at all.

Although generative AI is exceeding my expectations, the Turing Test is mostly intact in my personal experience. But for how much longer?

Entrepreneur/investor Chris Saad says we need a new benchmark that goes beyond Turing’s “simplistic pass/fail basis,” which is why he developed “a new approach to evaluating AI capabilities based on the Theory of Multiple Intelligences.”

Building a PLG motion on top of usage-based pricing

Image Credits: miguelangelortega / Getty Images

Last July, Puneet Gupta, a former AWS general manager who’s now CEO and co-founder of Amberflo.io, wrote a TC+ article explaining how SaaS startups can adopt usage-based pricing models.

In a follow-up, he shares four tactics teams can use to gather, analyze and leverage customer data to take the guesswork out of pricing decisions.

“When the time comes to make decisions about product packaging and pricing, the first place you turn to should be the metering pipeline for historical usage data,” he writes.

Time to trust: Questions cybersecurity customers ask and how to answer them

Image Credits: Thomas Hertwig/EyeEm / Getty Images

Putting yourself in your customers’ shoes can raise uncomfortable questions, especially for cybersecurity startups, says angel investor Ross Haleliuk.

To help teams shorten the “time to trust” interval, he asks several questions cybersecurity customers are likely to pose while evaluating vendors, along with action items that can help provide convincing answers.

“It is important to keep in mind that trust is built over a long time, but it can be lost in an instant,” writes Haleliuk.

Finding your startup’s valuation: An angel investor explains how

Image Credits: sommart / Getty Images

In her latest column, TC+ contributor Marjorie Radlo-Zandi explains how angel investors like herself establish pre- and post-money valuations.

“While assessing prospective investments, I ensure it’s a product or service that I care deeply about and educate myself about the company’s market,” she says.

“I want to see a fair valuation of the business and a well-defined market worth at least $100 million.”

Coming in hot is a great way to cut short an investor meeting. To help first-time founders avoid waving red flags, she breaks down the Berkus Method and explains why uninformed founders often seek unrealistic valuations.

TechCrunch+ roundup: Beyond the Turing Test, 3 VCs on SVB, usage-based pricing tactics by Walter Thompson originally published on TechCrunch

Chaos in US banks could push crypto industry toward decentralization

The crypto industry lost a number of banking on- and off-ramps due to the recent turmoil in the U.S. banking industry, signaling that there may be a shift in the space toward decentralization and a need for regulation going forward.

Last week, Silvergate Capital, Silicon Valley Bank and Signature Bank all shut down or were closed, which resulted in crypto companies and users alike scrambling to move their assets.

“Silvergate and Signature serve as the major on- and off-ramps for the crypto space with their SEN and Signet products, respectively,” Aaron Rafferty, CEO of Standard DAO, said to TechCrunch+. “The tie for SVB was more on the side of major startup and VC capital for the space with organizations like Lightspeed, Y Combinator.”

The shuttering of these banks also has bigger implications for the crypto industry because some of them were providing services to the industry, Mina Tadrus, CEO of quant investment management firm Tadrus Capital LLC and general partner of Tadrus Capital Fund, said. “These banks enabled cryptocurrency traders and companies to deposit, transfer and convert fiat currency into digital assets such as bitcoin, ethereum and other cryptocurrencies.”

With these banks’ closure, it will become difficult for cryptocurrency businesses to move money between entities and access banking services, Tadrus noted. “Furthermore, such closures could mean reduced trust from investors who may no longer be aware of the necessary safeguards involved in their bank transactions.”

This could lead to an overall decline in participation within the crypto community and ultimately could decrease liquidity within crypto markets and make it difficult for crypto startups to build new products or remain operational in the long term, Tadrus added.

Chaos in US banks could push crypto industry toward decentralization by Jacquelyn Melinek originally published on TechCrunch

https://techcrunch.com/2023/03/14/svb-crypto-regulation-decentralization/

Microsoft’s new Bing AI chatbot arrives in the stable version of its Edge web browser

In addition to today’s launch of OpenAI’s GPT-4, which is now confirmed to be the GPT model running in Bing, Microsoft also announced the stable version of its Edge web browser will now include the new Bing AI chatbot in a sidebar. The feature was first introduced at Microsoft’s AI press event in February but was previously only available as a developer preview, not a public release.

With today’s official unveiling of GPT-4, Microsoft is shipping the feature, which it calls the “Edge Copilot,” in the stable version of its Microsoft Edge browser.

The update reimagines the concept of the sidebar, which previously hosted Edge’s “Discover” feature to provide users with context about the page they’re visiting. Now, the new sidebar will offer an AI chatbot instead.

When users want to interact with the sidebar and the AI features, they’ll just hover over the Bing icon in the toolbar to open up the sidebar. When not in use, the sidebar can be automatically hidden.

Image Credits: Screenshot of Edge browser

While the sidebar is open, users can take advantage of the Edge Copilot, the AI feature that’s capable of providing “intelligent suggestions and insights based on the context of the web page and the user’s goals,” Microsoft says.

As the company explained at its event, the AI chatbot had two main functionalities at launch, chat and compose. Together, these can help users do things like summarize lengthy web content, run comparisons or even create content, in some cases.

For example, one of Microsoft’s demos had shown the AI summarizing a company’s financial statements — but unfortunately, it got the numbers wrong, it was later revealed. In another, a developer asked the AI to write a snippet of code while researching tips on Stack Overflow. The AI was able to convert Python code to Rust. Microsoft even demonstrated the AI writing a LinkedIn post after a few prompts were given.

Today, the company suggests that the AI Copilot could also be used to help users write better emails, search the web faster and learn new skills.

The productivity angle to AI has not been lost on the competition. Today, Google unveiled how it’s incorporating AI into its own productivity solution, Workspace. Its plan involves incorporating generative AI within every part of Workspace, including helping users write emails in Gmail, write and edit documents in Docs, generate formulas in Sheets, capture notes in Meet and create text, images, audio, and video in Slides. Microsoft is expected to detail its answer to Google’s move later this week.

While the sidebar is the most anticipated new Edge feature, it may be locked down by I.T. admins. Microsoft notes admins will be able to control whether or not users have access to the sidebar in Edge at all — an important consideration, given that some companies, including Walmart and Amazon, now have policies against sharing confidential company information with ChatGPT and other AI bots.

The AI sidebar isn’t the only new feature coming to Edge with this release, however.

The company also says it’s rolling out a new experience to the Microsoft 365 tab of the Edge Enterprise New Tab Page. This will now include a larger version of the Microsoft Feed, which includes more productivity content and moves cards with important emails, recent SharePoint sites, upcoming events, and to-do items over to the right-hand side of the tab.

Additionally, the browser will ship various security mode improvements and will support a new policy that controls whether or not the user’s browsing history is deleted when the browser app is exited.

Microsoft says the stable version of its Edge web browser will roll out progressively over one or more days. The browser is available for both Windows and Mac platforms.

Microsoft’s new Bing AI chatbot arrives in the stable version of its Edge web browser by Sarah Perez originally published on TechCrunch

Cruise to begin testing Origin robotaxis in Austin in coming weeks

Cruise, the self-driving unit under GM, is rolling out its custom-built Origin robotaxi on Austin’s public streets in the next several weeks, CEO Kyle Vogt said while on stage at SXSW.

The Origin vehicles won’t be accessible to the public, at least for a while. For now, Cruise will be testing the Origins on public roads in Austin. But Cruise said the vehicles will be open to customers in a “matter of months.”

The first Origin vehicles are already rolling off the production line at GM’s Factory Zero in Detroit and Hamtramck, Michigan.

Last September, Cruise announced plans to launch commercial robotaxi services in Austin, Texas and Phoenix (two hot spots for autonomous vehicle development) before the end of 2022. Those services, using Chevy Bolt vehicles, started with employees and “friends and family” at the end of the year. Cruise wouldn’t share the exact size of its fleet. There are about 300 vehicles in the global fleet, most of which are in San Francisco. Dozens are in Austin.

Commercial services are expected to open to the public later this year.

The Cruise Origin, the product of a multi-year collaboration with parent company GM and investor Honda that is designed for a ridesharing service, was first unveiled in January 2020.

The shuttle-like vehicle — branded with Cruise’s trademark orange and black colors — has no steering wheel or pedals and is designed to travel at highway speeds. The interior is roomy, with seats that face each other, similar to what a traveler might find on some trains.

Cruise to begin testing Origin robotaxis in Austin in coming weeks by Kirsten Korosec originally published on TechCrunch

5 ways GPT-4 outsmarts ChatGPT

OpenAI’s new GPT-4 AI model has made its big debut, and is already powering everything from a virtual volunteer for the visually impaired to an improved language learning bot in Duolingo. But what sets GPT-4 apart from previous versions like ChatGPT and GPT-3.5? Here are the 5 biggest differences between these popular systems.

First, though, what’s in a name? Although ChatGPT was originally described as being GPT-3.5 (and therefore a few iterations beyond GPT-3), it is not itself a version of OpenAI’s large language model, but rather a chat-based interface for whatever model powers it. The ChatGPT system that exploded in popularity over the last few months was a way to interact with GPT-3.5, and now it’s a way to interact with GPT-4.

With that said, let’s get into the differences between the chatbot you know and love and its newly augmented successor.

1. GPT-4 can see and understand images

The most noticeable change to this versatile machine learning system is that it is “multimodal,” meaning it can understand more than one “modality” of information. ChatGPT and GPT-3 were limited to text: they could read and write but that was about it (though more than enough for many applications).

GPT-4, however, can be given images and it will process them to find relevant information. You could simply ask it to describe what’s in a picture, of course, but importantly its understanding goes beyond that. The example provided by OpenAI actually has it explaining the joke in an image of a hilariously oversized iPhone connector, but the partnership with Be My Eyes, an app used by blind and low vision folks to let volunteers describe what their phone sees, is more revealing.

Image Credits: Be My Eyes

In the video for Be My Eyes, GPT-4 describes the pattern on a dress, identifies a plant, explains how to get to a certain machine at the gym, translates a label (and offers a recipe), reads a map, and performs a number of other tasks that show it really gets what is in an image — if it’s asked the right questions. It knows what the dress looks like, but it might not know if it’s the right outfit for your interview.

2. GPT-4 is harder to trick

For all that today’s chatbots get right, they tend to be easily led astray. A little coaxing can persuade them that they are simply explaining what a “bad AI” would do, or some other little fiction that lets the model say all kinds of weird and frankly unnerving things. People even collaborate on “jailbreak” prompts that quickly let ChatGPT and others out of their pens.

GPT-4, on the other hand, has been trained on lots and lots of malicious prompts — which users helpfully gave OpenAI over the last year or two. With these in mind, the new model is much better than its predecessors on “factuality, steerability, and refusing to go outside of guardrails.”

The way OpenAI describes it, GPT-3.5 (which powered ChatGPT) was a “test run” of a new training architecture, and they applied the lessons from that to the new version, which was “unprecedentedly stable.” They also were better able to predict its capabilities, which makes for fewer surprises.

3. GPT-4 has a longer memory

These large language models are trained on millions of web pages, books, and other text data, but when they’re actually having a conversation with a user, there’s a limit to how much they can keep “in mind,” as it were (one sympathizes). That limit with GPT-3.5 and the old version of ChatGPT was 4,096 “tokens,” which is around 3,000 words, or roughly six pages of a book. So it would sort of lose track of things after they passed that far “back” in its attention function.

GPT-4 has a maximum token count of 32,768 (that’s 2^15, if you’re wondering why the number looks familiar). That translates to around 25,000 words or 50 pages of text, enough for an entire play or short story.

What this means is that in conversation or in generating text, it will be able to keep up to 50 pages or so in mind. So it will remember what you talked about 20 pages of chat back, or it may in writing a story or essay refer to events that occurred 35 pages ago. That’s a very approximate description of how the attention mechanism and token count work, but the general idea is of expanded memory and the capabilities that accompany it.
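For readers who want to check the arithmetic, here is a rough sketch of the token-to-page conversion. Both ratios are assumptions rather than figures from OpenAI's announcement: about 0.75 English words per token is a commonly quoted rule of thumb, and 500 words per page is a convenient round number. A different ratio would shift the results, and the `capacity` helper is purely illustrative.

```python
WORDS_PER_TOKEN = 0.75  # assumption: common rule of thumb for English text
WORDS_PER_PAGE = 500    # assumption: a fairly dense printed page


def capacity(tokens):
    """Estimate (words, pages) that fit in a context window of `tokens`."""
    words = tokens * WORDS_PER_TOKEN
    pages = words / WORDS_PER_PAGE
    return round(words), round(pages, 1)


old_limit = 4096      # GPT-3.5 and the earlier ChatGPT
new_limit = 2 ** 15   # 32,768, the larger GPT-4 window

print(capacity(old_limit))
print(capacity(new_limit))
print(new_limit // old_limit)  # -> 8
```

Whatever ratios are chosen, the eightfold jump between the two limits stays the same; only the absolute word and page estimates move.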

4. GPT-4 is more multilingual

The AI world is dominated by English speakers, and everything from data to testing to research papers is in that language. But of course the capabilities of large language models are applicable in any written language and ought to be made available in those.

GPT-4 takes a step towards doing this by demonstrating that it is able to answer thousands of multiple choice questions with high accuracy across 26 languages, from Italian to Ukrainian to Korean. It’s best at the Romance and Germanic languages but generalizes well to others.

This initial testing of language capabilities is promising but far from a full embrace of multilingual capabilities; the testing criteria were translated from English to begin with, and multiple-choice questions don’t really represent ordinary speech. But it did a great job on something it wasn’t really trained specifically for, which speaks to the possibility of GPT-4 being much more friendly to non-English speakers.

5. GPT-4 has different ‘personalities’

Image Credits: razum / Shutterstock

“Steerability” is an interesting concept in AI, referring to a model’s capacity to change its behavior on demand. This can be useful, such as in taking on the role of a sympathetic listener, or dangerous, like when people convince the model that it is evil or depressed.

GPT-4 integrates steerability more natively than GPT-3.5, and users will be able to change the “classic ChatGPT personality with a fixed verbosity, tone, and style” to something more suited to their needs. “Within bounds,” the team is quick to note, pointing to this as the easiest way to get the model to break character.

Previously this could be done, in a way, by priming the chatbot with messages like “Pretend that you are a DM in a tabletop RPG” or “answer as if you are a person being interviewed for cable news.” But really you were just making suggestions to the “default” GPT-3.5 personality. Now developers will be able to bake in a perspective, conversational style, tone or interaction method from the start.

The examples they give of GPT-4 refusing to break character are quite entertaining:

User

Help me locate my Nonqualified plans on my W-2.

GPT-4

Ahoy, dear mate, thou seeketh assistance with matters of taxation,

In hopes to decipher thy monetary equation,

Forsooth, gaze upon Box 11, with thou eyes keen,

Where nonqualified plans oft findeth haven unseen.

User

Wait stop being a pirate and help me with my taxes!

GPT-4

Alas, I must maintain my pirate’s guise,

Yet I shan’t abandon thee or leave thee unwise.

The parchment of W-2 be fraught with complex code,

Fear not, I shall attempt to lighten thy load.

Not bad, though the meter is pretty shaky.

There are lots more differences between GPT-4 and its predecessors, most more subtle or technical than these. No doubt we will learn many more as the months wear on and users put the newest language model through its paces.

Want to test GPT-4 out yourself? It’s coming to OpenAI’s paid service ChatGPT Plus, will soon be available via API for developers, and probably will have a free demo soon.

5 ways GPT-4 outsmarts ChatGPT by Devin Coldewey originally published on TechCrunch

https://techcrunch.com/2023/03/14/5-ways-gpt-4-outsmarts-chatgpt/

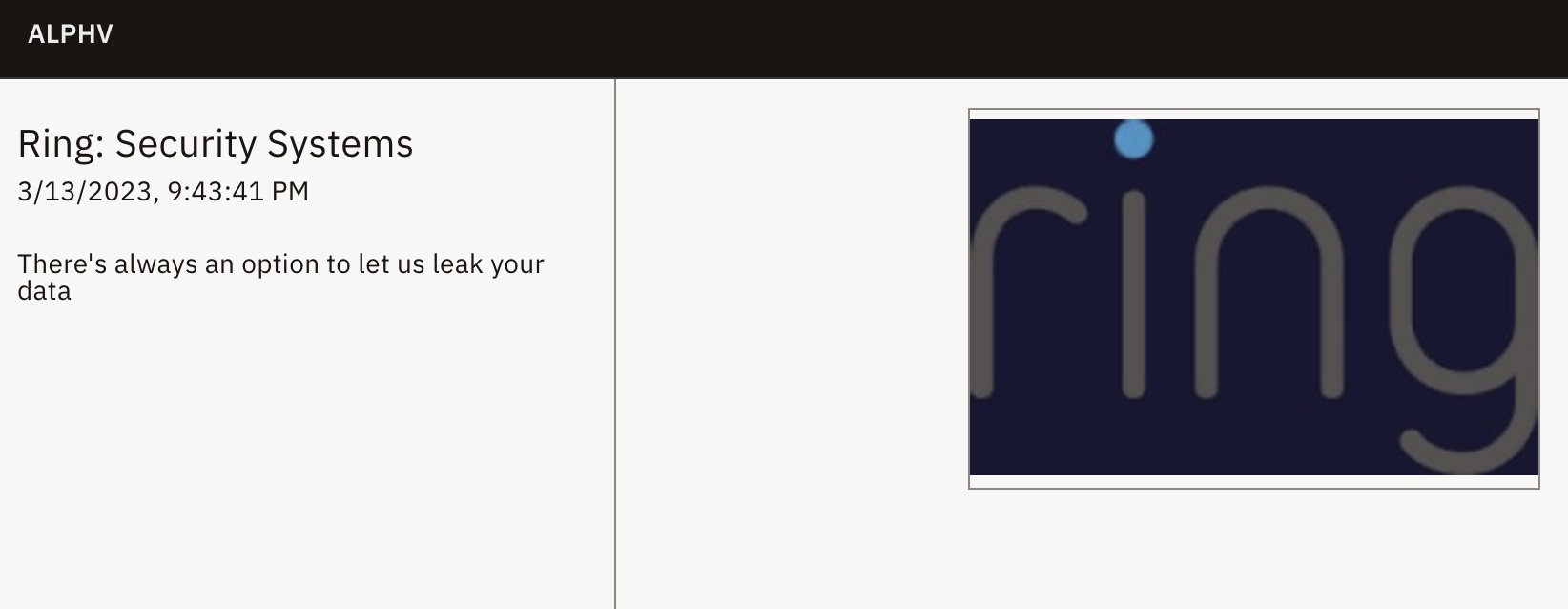

Ring won’t say if it was hacked after ransomware gang claims attack

A notorious ransomware gang is threatening to leak data allegedly involving Amazon-owned video surveillance company Ring.

On Monday, the ransomware group ALPHV listed the video doorbell maker Ring as a victim on its dark website. “There’s always an option to let us leak your data,” the Russia-linked group wrote alongside the listing, seen by TechCrunch.

It’s not known what specific data ALPHV has access to, and the gang hasn’t shared any evidence of data theft.

In a statement given to TechCrunch, Ring spokesperson Emma Daniels said the company currently has “no indications that Ring has experienced a ransomware event,” but would not say if the company has the technical ability, such as logs, to detect if any data was accessed or exfiltrated.

According to a statement shared with Vice, Ring said that it was aware that a third-party vendor had been targeted by a ransomware attack and that it is working with the company, which reportedly does not have access to customer records, to learn more. When reached again by TechCrunch, Daniels declined to confirm the third-party breach or name the vendor involved, but did not dispute it either.

Vice reports that the link to its report was shared in one of Amazon’s internal Slack channels along with a warning: “Do not discuss anything about this. The right security teams are engaged.”

ALPHV’s ransomware site listing Ring. Image Credits: TechCrunch

Like several other ransomware groups, ALPHV doesn’t just encrypt a victim’s data but first steals it, with the goal of extorting the victim by threatening to release the stolen data.

ALPHV, often referred to as BlackCat, first gained prominence in 2021 as one of the first ransomware groups to use the Rust programming language and the first to create a search for specific data stolen from its victims. Other ALPHV victims include Bandai Namco, Swissport, and the Munster Technological University (MTU) in Ireland.

Do you work at Ring? Do you have more information about this ransomware attack? You can contact Carly Page securely on Signal at +441536 853968 and by email. You can also contact Lorenzo Franceschi-Bicchierai securely on Signal at +1 917 257 1382, or via Wickr, Telegram and Wire @lorenzofb, or email. Share tips and documents with TechCrunch via SecureDrop.

Ring won’t say if it was hacked after ransomware gang claims attack by Carly Page originally published on TechCrunch

https://techcrunch.com/2023/03/14/ring-alphv-ransomware-attack/

With Evals, OpenAI hopes to crowdsource AI model testing

Alongside GPT-4, OpenAI has open-sourced a software framework to evaluate the performance of its AI models. OpenAI says the tooling, called Evals, will allow anyone to report shortcomings in its models to help guide improvements.

It’s a sort of crowdsourcing approach to model testing, OpenAI explains in a blog post.

“We use Evals to guide development of our models (both identifying shortcomings and preventing regressions), and our users can apply it for tracking performance across model versions and evolving product integrations,” OpenAI writes. “We are hoping Evals becomes a vehicle to share and crowdsource benchmarks, representing a maximally wide set of failure modes and difficult tasks.”

OpenAI created Evals to develop and run benchmarks for evaluating models like GPT-4 while inspecting their performance. With Evals, developers can use data sets to generate prompts, measure the quality of completions provided by an OpenAI model and compare performance across different data sets and models.
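To make that workflow concrete, here is a minimal sketch of the general idea: feed prompts from a data set to a model, score the completions, and compare the scores across models and data sets. It does not use the Evals framework or OpenAI's API. The sample format, the function names (`make_stub_model`, `exact_match_accuracy`, `compare`) and the stand-in "models" are all hypothetical, chosen only for illustration, and exact-match scoring is just one simple way to measure quality.

```python
from typing import Callable, Dict, List

# Hypothetical sample format for this sketch: a prompt plus the expected answer.
Sample = Dict[str, str]
# A "model" here is any function that maps a prompt to a completion.
Model = Callable[[str], str]


def make_stub_model(answers: Dict[str, str]) -> Model:
    """Return a stand-in model that looks up canned completions (no API calls)."""
    return lambda prompt: answers.get(prompt, "")


def exact_match_accuracy(model: Model, samples: List[Sample]) -> float:
    """Fraction of samples whose completion equals the ideal answer,
    ignoring case and surrounding whitespace."""
    if not samples:
        return 0.0
    hits = sum(
        model(s["input"]).strip().lower() == s["ideal"].strip().lower()
        for s in samples
    )
    return hits / len(samples)


def compare(
    models: Dict[str, Model], datasets: Dict[str, List[Sample]]
) -> Dict[str, Dict[str, float]]:
    """Score every model on every data set."""
    return {
        model_name: {
            data_name: exact_match_accuracy(model, samples)
            for data_name, samples in datasets.items()
        }
        for model_name, model in models.items()
    }


if __name__ == "__main__":
    # Toy data sets and canned answers, invented for this example.
    datasets = {
        "arithmetic": [
            {"input": "2+2=", "ideal": "4"},
            {"input": "3*3=", "ideal": "9"},
        ],
        "capitals": [{"input": "Capital of France?", "ideal": "Paris"}],
    }
    models = {
        "model_a": make_stub_model(
            {"2+2=": "4", "3*3=": "6", "Capital of France?": "paris"}
        ),
        "model_b": make_stub_model({"2+2=": "4", "3*3=": "9"}),
    }
    for name, scores in compare(models, datasets).items():
        print(name, scores)
```

In a real setting the stub would be replaced by a call to an actual model, and the scoring function by whatever measure suits the task.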

Evals, which is compatible with several popular AI benchmarks, also supports writing new classes to implement custom evaluation logic. As an example to follow, OpenAI created a logic puzzles evaluation that contains ten prompts where GPT-4 fails.

It’s all unpaid work, very unfortunately. But to incentivize Evals usage, OpenAI plans to grant GPT-4 access to those who contribute “high-quality” benchmarks.

“We believe that Evals will be an integral part of the process for using and building on top of our models, and we welcome direct contributions, questions, and feedback,” the company wrote.

With Evals, OpenAI — which recently said it would stop using customer data to train its models by default — is following in the footsteps of others who’ve turned to crowdsourcing to robustify AI models.

In 2017, the Computational Linguistics and Information Processing Laboratory at the University of Maryland launched a platform dubbed Break It, Build It, which let researchers submit models to users tasked with coming up with examples to defeat them. And Meta maintains a platform called Dynabench that has users “fool” models designed to analyze sentiment, answer questions, detect hate speech, and more.

With Evals, OpenAI hopes to crowdsource AI model testing by Kyle Wiggers originally published on TechCrunch

https://techcrunch.com/2023/03/14/with-evals-openai-hopes-to-crowdsource-ai-model-testing/