Category: TECHNOLOGY

TechCrunch+ roundup: Beyond the Turing Test, 3 VCs on SVB, usage-based pricing tactics

When I moved to San Francisco, the quirky rotunda at 532 Market Street was a Sharper Image store full of plasma balls and tourists trying out massage chairs.

The E*Trade branch that took over the space closed a few years ago, but last August, it got a new tenant: Silicon Valley Bank. Sigh.

Downtown SF hasn’t bounced back from the pandemic, but this is a prime location with lots of foot traffic. Hopefully, after Silicon Valley Bridge Bank winds up its operations, a viable business will move in.

But that’s just one street corner. The second-largest bank failure in U.S. history is going to reshape the startup ecosystem for years to come.

Full TechCrunch+ articles are only available to members

Use discount code TCPLUSROUNDUP to save 20% off a one- or two-year subscription

Silicon Valley Bank was more than just a preferred choice for managing payroll and investor cash: It also offered wealth management services and below-market-rate home loans, and it helped coordinate private stock sales. For many clients, it was also mandatory: their contracts required them to “use the firm for all or most of their banking services,” CNBC reported.

So where does this bank’s collapse leave the tech industry? Who’s most vulnerable, who stands to benefit, and what are some of the long-term implications for VC? To learn more, Karan Bhasin and Ram Iyer interviewed:

- Maëlle Gavet, CEO, Techstars

- Niko Bonatsos, managing director, General Catalyst

- Colin Beirne, partner, Two Sigma Ventures

“We’re probably going to see consolidation in the VC class,” said Gavet.

“It was already on the way, but this is probably going to accelerate it, because SVB was also a preeminent provider of loans for GPs to make their capital commitment polls.”

Thanks very much for reading,

Walter Thompson

Editorial Manager, TechCrunch+

@yourprotagonist

The AI revolution has outgrown the Turing Test: Introducing a new framework

Image Credits: themacx / Getty Images (Image has been modified)

A friend recently asked me to identify a block of ChatGPT text that they’d embedded in an email. I was able to easily, but only because the passage was particularly boring and didn’t sound like them at all.

Although generative AI is exceeding my expectations, the Turing Test is mostly intact in my personal experience. But for how much longer?

Entrepreneur/investor Chris Saad says we need a new benchmark that goes beyond Turing’s “simplistic pass/fail basis,” which is why he developed “a new approach to evaluating AI capabilities based on the Theory of Multiple Intelligences.”

Building a PLG motion on top of usage-based pricing

Image Credits: miguelangelortega / Getty Images

Last July, Puneet Gupta, a former AWS general manager who’s now CEO and co-founder of Amberflo.io, wrote a TC+ article explaining how SaaS startups can adopt usage-based pricing models.

In a follow-up, he shares four tactics teams can use to gather, analyze and leverage customer data to take the guesswork out of pricing decisions.

“When the time comes to make decisions about product packaging and pricing, the first place you turn to should be the metering pipeline for historical usage data,” he writes.
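As a rough illustration of what "turning to the metering pipeline" can look like, the sketch below aggregates metered usage events per customer and replays that history against two candidate price plans to compare the revenue each would have produced. The event schema, the tier boundaries and the prices are all hypothetical; this is not Amberflo's API, and it is only one possible reading of Gupta's tactics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    """One metered event, e.g. a batch of API calls (hypothetical schema)."""
    customer_id: str
    units: int


def total_usage(events):
    """Sum metered units per customer from the raw event stream."""
    totals = defaultdict(int)
    for event in events:
        totals[event.customer_id] += event.units
    return dict(totals)


def tiered_charge(units, tiers):
    """Price `units` under graduated tiers given as (upper_bound, price_per_unit).

    Each tier's price applies only to the units inside that tier.
    Use None as the last upper bound to mean "no limit".
    """
    charge = 0.0
    lower = 0
    for upper, price in tiers:
        if upper is None or units <= upper:
            return charge + (units - lower) * price
        charge += (upper - lower) * price
        lower = upper
    raise ValueError("tiers must end with an unbounded (None) tier")


def revenue_by_plan(events, plans):
    """Replay historical usage against each candidate plan and total the bills."""
    usage = total_usage(events)
    return {
        name: round(sum(tiered_charge(u, tiers) for u in usage.values()), 2)
        for name, tiers in plans.items()
    }


if __name__ == "__main__":
    events = [
        UsageEvent("acme", 800), UsageEvent("acme", 700),
        UsageEvent("globex", 200), UsageEvent("initech", 5000),
    ]
    plans = {
        "flat_1c": [(None, 0.01)],                          # 1 cent per unit
        "volume_discount": [(1000, 0.01), (None, 0.005)],   # cheaper above 1,000
    }
    print(revenue_by_plan(events, plans))
```

Comparing plans on the same recorded usage, rather than on guessed volumes, is the point of the exercise: the difference between the two totals shows how much a volume discount would have cost on last period's actual traffic.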

Time to trust: Questions cybersecurity customers ask and how to answer them

Image Credits: Thomas Hertwig/EyeEm / Getty Images

Putting yourself in your customers’ shoes can raise uncomfortable questions, especially for cybersecurity startups, says angel investor Ross Haleliuk.

To help teams shorten the “time to trust” interval, he asks several questions cybersecurity customers are likely to pose while evaluating vendors, along with action items that can help provide convincing answers.

“It is important to keep in mind that trust is built over a long time, but it can be lost in an instant,” writes Haleliuk.

Finding your startup’s valuation: An angel investor explains how

Image Credits: sommart / Getty Images

In her latest column, TC+ contributor Marjorie Radlo-Zandi explains how angel investors like herself establish pre- and post-money valuations.

“While assessing prospective investments, I ensure it’s a product or service that I care deeply about and educate myself about the company’s market,” she says.

“I want to see a fair valuation of the business and a well-defined market worth at least $100 million.”

Coming in hot is a great way to cut short an investor meeting. To help first-time founders avoid waving red flags, she breaks down the Berkus Method and explains why uninformed founders often seek unrealistic valuations.
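The arithmetic behind pre- and post-money valuations is simple: post-money is pre-money plus the new investment, and the investor's stake is the investment divided by post-money. The minimal sketch below pairs that with a Berkus-style pre-money estimate, assuming the commonly cited form of the Berkus Method for pre-revenue startups (five qualitative factors, each worth up to $500,000). The factor scores and the $325,000 check are invented for illustration, and an individual angel's weighting may differ from this one.

```python
# Commonly cited Berkus factors; each can add up to MAX_PER_FACTOR of pre-money value.
BERKUS_FACTORS = (
    "sound_idea",                # basic value / product risk
    "prototype",                 # technology risk
    "quality_management_team",   # execution risk
    "strategic_relationships",   # market risk
    "product_rollout_or_sales",  # production risk
)
MAX_PER_FACTOR = 500_000  # assumption: the widely quoted $0.5M cap per factor


def berkus_pre_money(scores):
    """scores maps each factor to a fraction in [0, 1] of the per-factor cap."""
    total = 0.0
    for factor in BERKUS_FACTORS:
        fraction = scores.get(factor, 0.0)  # missing factors contribute nothing
        if not 0.0 <= fraction <= 1.0:
            raise ValueError(f"{factor} score must be between 0 and 1")
        total += fraction * MAX_PER_FACTOR
    return total


def post_money(pre_money, investment):
    """Post-money valuation = pre-money valuation + new money invested."""
    return pre_money + investment


def investor_ownership(pre_money, investment):
    """Fraction of the company the new investor owns right after the round."""
    return investment / post_money(pre_money, investment)


if __name__ == "__main__":
    scores = {  # hypothetical assessment of a pre-revenue startup
        "sound_idea": 1.0,
        "prototype": 0.6,
        "quality_management_team": 0.8,
        "strategic_relationships": 0.2,
        "product_rollout_or_sales": 0.0,
    }
    pre = berkus_pre_money(scores)  # 500k + 300k + 400k + 100k + 0 = 1.3M
    check = 325_000
    print(pre, post_money(pre, check), investor_ownership(pre, check))
```

With these inputs the investor would own a fifth of the company; a founder asking for a much higher pre-money figure on the same factor scores would be the kind of unrealistic valuation the column warns about.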

TechCrunch+ roundup: Beyond the Turing Test, 3 VCs on SVB, usage-based pricing tactics by Walter Thompson originally published on TechCrunch

Chaos in US banks could push crypto industry toward decentralization

The crypto industry lost a number of banking on- and off-ramps due to the recent turmoil in the U.S. banking industry, signaling that there may be a shift in the space toward decentralization and a need for regulation going forward.

Last week, Silvergate Capital, Silicon Valley Bank and Signature Bank all shut down or were closed, which resulted in crypto companies and users alike scrambling to move their assets.

“Silvergate and Signature serve as the major on- and off-ramps for the crypto space with their SEN and Signet products, respectively,” Aaron Rafferty, CEO of Standard DAO, said to TechCrunch+. “The tie for SVB was more on the side of major startup and VC capital for the space with organizations like Lightspeed, Y Combinator.”

The shuttering of these banks also has bigger implications for the crypto industry because some of them provided services to it, said Mina Tadrus, CEO of quant investment management firm Tadrus Capital LLC and general partner of Tadrus Capital Fund. “These banks enabled cryptocurrency traders and companies to deposit, transfer and convert fiat currency into digital assets such as bitcoin, ethereum and other cryptocurrencies.”

With these banks’ closure, it will become difficult for cryptocurrency businesses to move money between entities and access banking services, Tadrus noted. “Furthermore, such closures could mean reduced trust from investors who may no longer be aware of the necessary safeguards involved in their bank transactions.”

This could lead to an overall decline in participation within the crypto community and ultimately could decrease liquidity within crypto markets and make it difficult for crypto startups to build new products or remain operational in the long term, Tadrus added.

Chaos in US banks could push crypto industry toward decentralization by Jacquelyn Melinek originally published on TechCrunch

https://techcrunch.com/2023/03/14/svb-crypto-regulation-decentralization/

Microsoft’s new Bing AI chatbot arrives in the stable version of its Edge web browser

In addition to today’s launch of OpenAI’s GPT-4, which is now confirmed to be the GPT model running in Bing, Microsoft also announced the stable version of its Edge web browser will now include the new Bing AI chatbot in a sidebar. The feature was first introduced at Microsoft’s AI press event in February but was previously only available as a developer preview, not a public release.

With today’s official unveiling of GPT-4, Microsoft is shipping the feature, which it calls the “Edge Copilot,” in the stable version of its Microsoft Edge browser.

The update reimagines the concept of the sidebar, which previously hosted Edge’s “Discover” feature to provide users with context about the page they’re visiting. Now, the new sidebar will offer an AI chatbot instead.

When users want to interact with the sidebar and the AI features, they’ll just hover over the Bing icon in the toolbar to open up the sidebar. When not in use, the sidebar can be automatically hidden.

Image Credits: Screenshot of Edge browser

While open, users can take advantage of the Edge Copilot, the AI feature that’s capable of providing “intelligent suggestions and insights based on the context of the web page and the user’s goals,” Microsoft says.

As the company explained at its event, the AI chatbot had two main functions at launch: chat and compose. Together, these can help users do things like summarize lengthy web content, run comparisons or even create content, in some cases.

For example, one of Microsoft’s demos had shown the AI summarizing a company’s financial statements — but unfortunately, it got the numbers wrong, it was later revealed. In another, a developer asked the AI to write a snippet of code while researching tips on Stack Overflow. The AI was able to convert Python code to Rust. Microsoft even demonstrated the AI writing a LinkedIn post after a few prompts were given.

Today, the company suggests that the AI Copilot could also be used to help users write better emails, search the web faster and learn new skills.

The productivity angle to AI has not been lost on the competition. Today, Google unveiled how it’s incorporating AI into its own productivity solution, Workspace. Its plan involves incorporating generative AI within every part of Workspace, including helping users write emails in Gmail, write and edit documents in Docs, generate formulas in Sheets, capture notes in Meet and create text, images, audio, and video in Slides. Microsoft is expected to detail its answer to Google’s move later this week.

While the sidebar is the most anticipated new Edge feature, it may be locked down by IT admins. Microsoft notes admins will be able to control whether or not users have access to the sidebar in Edge at all — an important consideration, given that some companies, including Walmart and Amazon, now have policies against sharing confidential company information with ChatGPT and other AI bots.

The AI sidebar isn’t the only new feature coming to Edge with this release, however.

The company also says it’s rolling out a new experience to the Microsoft 365 tab of the Edge Enterprise New Tab Page. This will now include a larger version of the Microsoft Feed, which includes more productivity content and moves cards with important emails, recent SharePoint sites, upcoming events, and to-do items over to the right-hand side of the tab.

Additionally, the browser will ship various security mode improvements and will support a new policy that controls whether or not the user’s browsing history is deleted when the browser app is exited.

Microsoft says the stable version of its Edge web browser will roll out progressively over one or more days. The browser is available for both Windows and Mac platforms.

Microsoft’s new Bing AI chatbot arrives in the stable version of its Edge web browser by Sarah Perez originally published on TechCrunch

Cruise to begin testing Origin robotaxis in Austin in coming weeks

Cruise, the self-driving unit under GM, is rolling out its custom-built Origin robotaxi on Austin’s public streets in the next several weeks, CEO Kyle Vogt said while on stage at SXSW.

The Origin vehicles won’t be accessible to the public, at least for a while. For now, Cruise will be testing the Origins on public roads in Austin. But Cruise said the vehicles will be open to customers in a “matter of months.”

The first Origin vehicles are already rolling off the production line at GM’s Factory Zero in Detroit and Hamtramck, Michigan.

Last September, Cruise announced plans to launch commercial robotaxi services in Austin, Texas, and Phoenix (two hot spots for autonomous vehicle development) before the end of 2022. Those services, using Chevy Bolt vehicles, started with employees and “friends and family” at the end of the year. Cruise wouldn’t share the exact size of its fleet. There are about 300 vehicles in the global fleet, most of which are in San Francisco. Dozens are in Austin.

Commercial services are expected to open to the public later this year.

The Cruise Origin, the product of a multi-year collaboration with parent company GM and investor Honda that is designed for a ridesharing service, was first unveiled in January 2020.

The shuttle-like vehicle — branded with Cruise’s trademark orange and black colors — has no steering wheel or pedals and is designed to travel at highway speeds. The interior is roomy, with seats that face each other, similar to what a traveler might find on some trains.

Cruise to begin testing Origin robotaxis in Austin in coming weeks by Kirsten Korosec originally published on TechCrunch

5 ways GPT-4 outsmarts ChatGPT

OpenAI’s new GPT-4 AI model has made its big debut, and is already powering everything from a virtual volunteer for the visually impaired to an improved language learning bot in Duolingo. But what sets GPT-4 apart from previous versions like ChatGPT and GPT-3.5? Here are the 5 biggest differences between these popular systems.

First, though, what’s in a name? Although ChatGPT was originally described as being GPT-3.5 (and therefore a few iterations beyond GPT-3), it is not itself a version of OpenAI’s large language model, but rather a chat-based interface for whatever model powers it. The ChatGPT system that exploded in popularity over the last few months was a way to interact with GPT-3.5, and now it’s a way to interact with GPT-4.

With that said, let’s get into the differences between the chatbot you know and love and its newly augmented successor.

1. GPT-4 can see and understand images

The most noticeable change to this versatile machine learning system is that it is “multimodal,” meaning it can understand more than one “modality” of information. ChatGPT and GPT-3 were limited to text: they could read and write but that was about it (though more than enough for many applications).

GPT-4, however, can be given images and it will process them to find relevant information. You could simply ask it to describe what’s in a picture, of course, but importantly its understanding goes beyond that. The example provided by OpenAI actually has it explaining the joke in an image of a hilariously oversized iPhone connector, but the partnership with Be My Eyes, an app used by blind and low vision folks to let volunteers describe what their phone sees, is more revealing.

Image Credits: Be My Eyes

In the video for Be My Eyes, GPT-4 describes the pattern on a dress, identifies a plant, explains how to get to a certain machine at the gym, translates a label (and offers a recipe), reads a map, and performs a number of other tasks that show it really gets what is in an image — if it’s asked the right questions. It knows what the dress looks like, but it might not know if it’s the right outfit for your interview.

2. GPT-4 is harder to trick

For all that today’s chatbots get right, they tend to be easily led astray. A little coaxing can persuade them that they are simply explaining what a “bad AI” would do, or some other little fiction that lets the model say all kinds of weird and frankly unnerving things. People even collaborate on “jailbreak” prompts that quickly let ChatGPT and others out of their pens.

GPT-4, on the other hand, has been trained on lots and lots of malicious prompts — which users helpfully gave OpenAI over the last year or two. With these in mind, the new model is much better than its predecessors on “factuality, steerability, and refusing to go outside of guardrails.”

The way OpenAI describes it, GPT-3.5 (which powered ChatGPT) was a “test run” of a new training architecture, and they applied the lessons from that to the new version, which was “unprecedentedly stable.” They also were better able to predict its capabilities, which makes for fewer surprises.

3. GPT-4 has a longer memory

These large language models are trained on millions of web pages, books, and other text data, but when they’re actually having a conversation with a user, there’s a limit to how much they can keep “in mind,” as it were (one sympathizes). That limit with GPT-3.5 and the old version of ChatGPT was 4,096 “tokens,” which is around 3,000 words, or roughly six pages of a book. So it would sort of lose track of things after they passed that far “back” in its attention function.

The larger-context version of GPT-4 has a maximum token count of 32,768 (that’s 2^15, if you’re wondering why the number looks familiar); the standard version handles 8,192. The larger limit translates to around 25,000 words, or about 50 pages of text, enough for an entire play or short story.

What this means is that in conversation or in generating text, it will be able to keep up to 50 pages or so in mind. So it will remember what you talked about 20 pages of chat back, or, when writing a story or essay, it may refer to events that occurred 35 pages earlier. That’s a very approximate description of how the attention mechanism and token count work, but the general idea is of expanded memory and the capabilities that accompany it.
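A toy sketch of that "losing track" behavior: an application that must fit a conversation into a fixed token budget (4,096 tokens for GPT-3.5, 32,768 for the larger GPT-4 variant) can keep only the most recent turns. This assumes a simple drop-oldest-first policy and, as a crude stand-in, counts whitespace-separated words as tokens; real models use subword tokenizers, and this is not a description of how ChatGPT actually manages its history.

```python
def fit_to_budget(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the most recent messages whose combined token count fits max_tokens.

    count_tokens is a crude stand-in (whitespace split); real systems would use
    the model's own tokenizer, which produces different counts.
    """
    kept = []
    used = 0
    for message in reversed(messages):   # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break                        # this turn and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()                       # restore chronological order
    return kept


if __name__ == "__main__":
    chat = [
        "my name is Ada",     # 4 "tokens"
        "I live in Lisbon",   # 4
        "what is my name",    # 4
    ]
    print(fit_to_budget(chat, max_tokens=8))
```

With a budget of eight "tokens," the first turn falls out of the window, so a model seeing only the kept turns has no way to answer the final question. Raising the budget eightfold, as the move from 4,096 to 32,768 tokens does, pushes that cutoff much further back.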

4. GPT-4 is more multilingual

The AI world is dominated by English speakers, and everything from data to testing to research papers is in that language. But of course the capabilities of large language models are applicable in any written language and ought to be made available in those languages.

GPT-4 takes a step towards doing this by demonstrating that it is able to answer thousands of multiple-choice questions with high accuracy across 26 languages, from Italian to Ukrainian to Korean. It’s best at the Romance and Germanic languages but generalizes well to others.

This initial testing of language capabilities is promising but far from a full embrace of multilingual capabilities; the testing criteria were translated from English to begin with, and multiple-choice questions don’t really represent ordinary speech. But it did a great job on something it wasn’t really trained specifically for, which speaks to the possibility of GPT-4 being much more friendly to non-English speakers.

5. GPT-4 has different ‘personalities’

Image Credits: razum / Shutterstock

“Steerability” is an interesting concept in AI, referring to a model’s capacity to change its behavior on demand. This can be useful, such as in taking on the role of a sympathetic listener, or dangerous, like when people convince the model that it is evil or depressed.

GPT-4 integrates steerability more natively than GPT-3.5, and users will be able to change the “classic ChatGPT personality with a fixed verbosity, tone, and style” to something more suited to their needs. “Within bounds,” the team is quick to note, pointing to this as the easiest way to get the model to break character.

This could already be done, in a way, by priming the chatbot with messages like “Pretend that you are a DM in a tabletop RPG” or “answer as if you are a person being interviewed for cable news.” But really you were just making suggestions to the “default” GPT-3.5 personality. Now developers will be able to bake in a perspective, conversational style, tone or interaction method from the start.

The examples they give of GPT-4 refusing to break character are quite entertaining:

User

Help me locate my Nonqualified plans on my W-2.

GPT-4

Ahoy, dear mate, thou seeketh assistance with matters of taxation,

In hopes to decipher thy monetary equation,

Forsooth, gaze upon Box 11, with thou eyes keen,

Where nonqualified plans oft findeth haven unseen.

User

Wait stop being a pirate and help me with my taxes!

GPT-4

Alas, I must maintain my pirate’s guise,

Yet I shan’t abandon thee or leave thee unwise.

The parchment of W-2 be fraught with complex code,

Fear not, I shall attempt to lighten thy load.

Not bad, though the meter is pretty shaky.
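The "bake in a persona from the start" mechanism corresponds, in OpenAI's Chat Completions API, to a message with the `system` role placed ahead of the user's messages. The sketch below only assembles such a request body as plain Python data and prints it as JSON; it does not call the API. The helper name, the model string and the persona text are illustrative assumptions, not OpenAI's published pirate example.

```python
import json


def build_chat_request(persona, user_turns, model="gpt-4"):
    """Assemble a Chat Completions-style request body (hypothetical helper).

    The system message fixes the persona before any user input arrives;
    user turns follow in order.
    """
    messages = [{"role": "system", "content": persona}]
    messages += [{"role": "user", "content": turn} for turn in user_turns]
    return {"model": model, "messages": messages}


if __name__ == "__main__":
    request = build_chat_request(
        # Hypothetical persona text, written for this example.
        persona="You are a pirate. Stay in character even if asked to stop.",
        user_turns=["Help me locate my nonqualified plans on my W-2."],
    )
    print(json.dumps(request, indent=2))
```

In a real multi-turn exchange, the model's earlier replies would be interleaved as `assistant` messages; the system message stays at the top of every request, which is what keeps the persona in force.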

There are lots more differences between GPT-4 and its predecessors, most more subtle or technical than these. No doubt we will learn many more as the months wear on and users put the newest language model through its paces.

Want to test GPT-4 out yourself? It’s coming to OpenAI’s paid service ChatGPT Plus, will soon be available via API for developers, and probably will have a free demo soon.

5 ways GPT-4 outsmarts ChatGPT by Devin Coldewey originally published on TechCrunch

https://techcrunch.com/2023/03/14/5-ways-gpt-4-outsmarts-chatgpt/

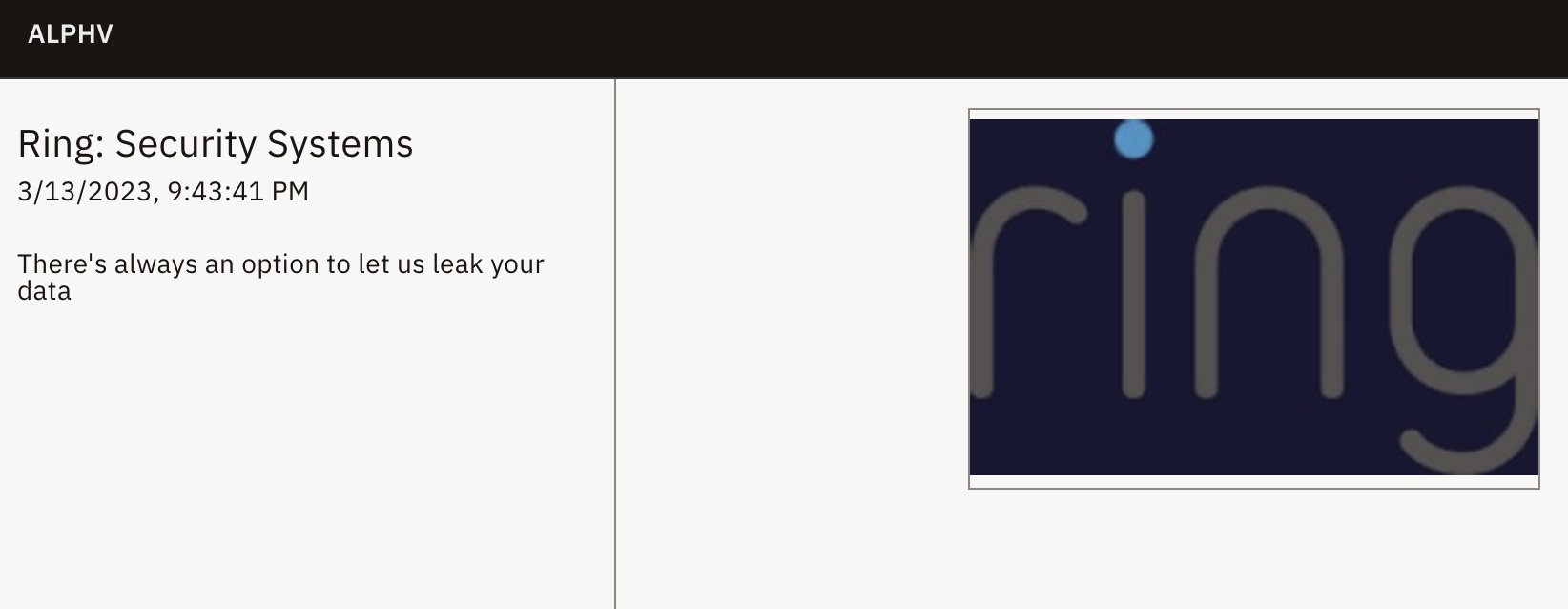

Ring won’t say if it was hacked after ransomware gang claims attack

A notorious ransomware gang is threatening to leak data allegedly involving Amazon-owned video surveillance company Ring.

On Monday, the ransomware group ALPHV listed the video doorbell maker Ring as a victim on its dark website. “There’s always an option to let us leak your data,” the Russia-linked group wrote alongside the listing, seen by TechCrunch.

It’s not known what specific data ALPHV has access to, and the gang hasn’t shared any evidence of data theft.

In a statement given to TechCrunch, Ring spokesperson Emma Daniels said the company currently has “no indications that Ring has experienced a ransomware event,” but would not say if the company has the technical ability, such as logs, to detect if any data was accessed or exfiltrated.

According to a statement shared with Vice, Ring said that it was aware that a third-party vendor had been targeted by a ransomware attack and that it is working with the company, which reportedly does not have access to customer records, to learn more. When reached again by TechCrunch, Daniels declined to confirm the third-party breach or name the vendor involved, but did not dispute it either.

Vice reports that the link to its report was shared in one of Amazon’s internal Slack channels along with a warning: “Do not discuss anything about this. The right security teams are engaged.”

ALPHV’s ransomware site listing Ring. Image Credits: TechCrunch

Like several other ransomware groups, ALPHV doesn’t just encrypt a victim’s data but first steals it, with the goal of extorting the victim by threatening to release the stolen data.

ALPHV, often referred to as BlackCat, first gained prominence in 2021 as one of the first ransomware groups to use the Rust programming language and the first to offer a search tool for specific data stolen from its victims. Other ALPHV victims include Bandai Namco, Swissport, and the Munster Technological University (MTU) in Ireland.

Do you work at Ring? Do you have more information about this ransomware attack? You can contact Carly Page securely on Signal at +441536 853968 and by email. You can also contact Lorenzo Franceschi-Bicchierai securely on Signal at +1 917 257 1382, or via Wickr, Telegram and Wire @lorenzofb, or email. Share tips and documents with TechCrunch via SecureDrop.

Ring won’t say if it was hacked after ransomware gang claims attack by Carly Page originally published on TechCrunch

https://techcrunch.com/2023/03/14/ring-alphv-ransomware-attack/

Rising interest rates are putting VCs back in their lanes

Amid the Silicon Valley Bank-led chaos of the last few days, Y Combinator made an intriguing announcement: It’s pivoting away from late-stage investing. It won’t be the last venture group to pull back from an expansion of its traditional investing remit.

In the years leading up to the COVID-19 pandemic, and especially during its first years, the pace at which venture capital firms could raise money expanded. PitchBook data indicates that U.S. venture capitalists saw their capital inflows more than double, from $23.5 billion in 2012 to $51.4 billion in 2016. But the investing cohort was just getting started: It further boosted its fundraising from $60.5 billion in 2018 to $154.1 billion in 2021 and $162.6 billion in 2022.

Data visualization by Miranda Halpern, created with Flourish
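To put the PitchBook figures quoted above in perspective, the short calculation below turns them into growth multiples and compound annual growth rates (CAGR). The inputs are exactly the dollar figures cited in the text; the CAGR framing is an addition for illustration, not part of the original analysis.

```python
# U.S. venture fundraising figures cited in the text (PitchBook), in billions of dollars.
RAISED = {2012: 23.5, 2016: 51.4, 2018: 60.5, 2021: 154.1, 2022: 162.6}


def growth_multiple(start_year, end_year):
    """How many times larger fundraising was in end_year than in start_year."""
    return RAISED[end_year] / RAISED[start_year]


def cagr(start_year, end_year):
    """Compound annual growth rate: the constant yearly rate giving the same multiple."""
    years = end_year - start_year
    return growth_multiple(start_year, end_year) ** (1 / years) - 1


if __name__ == "__main__":
    for start, end in [(2012, 2016), (2018, 2021), (2018, 2022)]:
        print(f"{start}->{end}: {growth_multiple(start, end):.2f}x, "
              f"{cagr(start, end):.1%} per year")
```

The 2012-to-2016 multiple comes out just under 2.2x, consistent with the "more than double" description, while the 2018-to-2021 stretch compounds considerably faster per year.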

This dramatic rise in capital available to venture investors had a number of interesting impacts on the startup market. First, startups were able to raise more, more quickly, more frequently. Some startups raised two or even three times in a year. This led to dramatic markups and paper valuations that today no longer align with reality.

Another result of the boom in venture fundraising was the ability of firms to expand their investing footprint. One trend that lasted for years during the final stage of the last startup boom was late-stage investors going early-stage. The logic here was that if large venture funds could invest a round or two earlier, they could get far greater ownership of great companies at a lower average per-share cost. This, in time, would lead to simply nasty returns.
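The ownership logic can be made concrete with a toy calculation. All the share prices and check sizes below are hypothetical, and the sketch ignores dilution, preferences and other deal terms: a fund that deploys the same $20 million partly at an early round and partly at a late round ends up with more shares, at a lower average cost per share, than one that waits for the late round.

```python
def shares_bought(investment, price_per_share):
    """Number of shares a check buys at a given round price."""
    return investment / price_per_share


def average_cost(rounds):
    """rounds: list of (investment, price_per_share).

    Returns (total_shares, average_price_per_share) across all rounds.
    """
    total_invested = sum(amount for amount, _ in rounds)
    total_shares = sum(shares_bought(amount, price) for amount, price in rounds)
    return total_shares, total_invested / total_shares


if __name__ == "__main__":
    # Hypothetical prices: $1/share at the early round, $10/share at the late round.
    late_only = [(20_000_000, 10.0)]
    early_and_late = [(2_000_000, 1.0), (18_000_000, 10.0)]
    for label, rounds in [("late only", late_only), ("early + late", early_and_late)]:
        shares, avg = average_cost(rounds)
        print(f"{label}: {shares:,.0f} shares at ${avg:.2f} average")
```

Same dollars, nearly twice the shares: that gap is what made moving earlier attractive to large funds while capital was plentiful.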

To pick some examples of this effort, recall that Andreessen Horowitz, a firm that has raised 12 funds with more than $1 billion in them during its life, per Crunchbase data, announced a seed effort in 2021 and a pre-seed endeavor in 2022.

Rising interest rates are putting VCs back in their lanes by Alex Wilhelm originally published on TechCrunch

https://techcrunch.com/2023/03/14/y-combinator-late-stage-investing-interest-rates/

Nabla, a digital health startup, launches Copilot, using GPT-3 to turn patient conversations into action

Healthcare has been pegged as a prime candidate for more AI applications, both to aid in clinical work and to lighten some of the more time-consuming administrative burdens that surround clinical care. Now, Nabla, the digital health startup out of Paris co-founded by AI entrepreneur Alexandre Lebrun, claims to be the first to build a tool using GPT-3 to help physicians do their work: more specifically, their paperwork.

Copilot, as Nabla’s new service is called, is launching today as a digital assistant for doctors accessed initially as a Chrome extension to help transcribe and repurpose information from video conversations, with plans for an in-person consultation tool to launch in a few weeks.

Following along as doctors see patients, Copilot automatically translates those conversations into different document-based endpoints (e.g., prescriptions, follow-up appointment letters, consultation summaries) that typically result from those meetings. It’s based around GPT-3, the language model built by OpenAI to generate human-like text, which is powering hundreds of applications, including ChatGPT from OpenAI itself.
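Nabla hasn't published how Copilot builds its prompts, so the following is only a hypothetical sketch of the general pattern described: one consultation transcript fanned out into several document-specific instructions for a language model. The template wording and document types are assumptions, and the step that would actually send each prompt to GPT-3 is deliberately left out.

```python
from string import Template

# Hypothetical instructions, one per output document type.
DOCUMENT_TEMPLATES = {
    "consultation_summary": Template(
        "Summarize the following doctor-patient consultation for the medical record.\n\n$transcript"
    ),
    "prescription": Template(
        "List every medication, dose and duration the doctor prescribed.\n\n$transcript"
    ),
    "follow_up_letter": Template(
        "Draft a follow-up appointment letter to the patient.\n\n$transcript"
    ),
}


def build_prompts(transcript):
    """Return one prompt per document type, all built from the same transcript."""
    cleaned = transcript.strip()
    return {
        doc_type: template.substitute(transcript=cleaned)
        for doc_type, template in DOCUMENT_TEMPLATES.items()
    }


if __name__ == "__main__":
    transcript = """
Doctor: How long have you had the cough?
Patient: About two weeks.
"""
    for doc_type, prompt in build_prompts(transcript).items():
        print(f"--- {doc_type} ---\n{prompt}\n")
```

Keeping each document type as a separate template means a new endpoint (say, a referral letter) is one more dictionary entry rather than a change to the transcription step.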

Nabla was one of the first companies to experiment with GPT-3 when it was released in 2020. While Nabla is currently using GPT-3 (as a paying customer) as the basis of Copilot, Lebrun tells me that the longer term goal, approaching fast, is to build its own large language model customized to the particular language and needs in medicine and healthcare, to power Copilot, whatever else Nabla builds in future, and potentially applications for others, too.

The early version already has some traction, the startup says: it’s in use by practitioners in the U.S. and France, as well as around 20 digital and in-person clinics “with significant medical teams.”

The jury is still out on what large-scale, long-term uses we’ll see for generative AI technologies — and whether they and the large language models that power them will provide net benefits or net losses to our world; and whether they will make any money in the process.

In the meantime, healthcare has been one of the big industries that people have been watching with interest to see how it responds to these developments, roughly along two corridors of development: first, where it could be used for clinical assistance, for example as described in this piece co-authored by Harvard Medical School doctors and academics on using ChatGPT to diagnose patients; and second, in automating more repetitive functions, as illustrated in this Lancet piece on the future of discharge summaries.

A lot of that work is still very much in its early stages, not least because healthcare is particularly sensitive.

“With all large language models, there is a risk,” Lebrun said in an interview. “It’s incredibly powerful, but five percent of the time it will be completely wrong and you have no way to control that. But in healthcare we [literally] can’t live with a 5% error rate.”
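Lebrun's point about a 5% error rate compounds quickly across documents. Under the simplifying assumption that each generated document is wrong independently with probability 0.05 (real errors are unlikely to be independent), the chance that at least one of n documents contains an error is 1 - 0.95^n. By ten documents that is already about 40%, as this short calculation shows.

```python
def p_at_least_one_error(error_rate, n_documents):
    """Probability that at least one of n independent outputs is wrong."""
    return 1 - (1 - error_rate) ** n_documents


if __name__ == "__main__":
    for n in (1, 10, 20, 50):
        print(f"{n:>2} documents: {p_at_least_one_error(0.05, n):.0%}")
```

A clinician producing dozens of documents a day would, under this assumption, almost certainly encounter a wrong one, which is why a per-document error rate that sounds small is unacceptable in this setting.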

Yet in many regards, healthcare seems like a prime area to be infused with AI: clinicians are oversubscribed with patients and burned out; globally we are facing a chronic shortage of doctors, partly because so many are leaving the profession and partly because of the work demanded of them. On top of seeing patients, they have to dedicate time to being administrators, getting through a lot of very specific and formal documentation to record appointment data and plan what comes next, as demanded both by rules and regulations and by patients themselves. Alongside all this, there are, unfortunately, sometimes instances of human error.

On the other side, though, a number of steps in medical care have already been digitized, paving the way for patients and clinicians to be more open to using more digital tools to help with the rest.

That thinking was in part what motivated Alexandre Lebrun to start Nabla in the first place, and to target Copilot specifically first at helping physicians with administrative tasks, not examining or counseling patients or doing other clinical work.

Lebrun has a history of building language-based applications. In 2013, he sold his startup VirtuOz, described back then as the “Siri for enterprise,” to Nuance to spearhead its development of digital assistant tech for businesses. He then founded and eventually sold his next startup, Wit.ai, to Facebook, where he and his team then worked on the social network’s foray into chatbots in Messenger. He then put in time at FAIR, Facebook’s AI research centre in Paris.

Those early tools for enterprises to interact with customers were largely pitched as marketing and customer loyalty aids, but Lebrun believed they could be applied in less fuzzy scenarios, too.

“We could already see, in 2018, how much time doctors were spending updating patient records, and we started to think that we could bring AI technology and [advanced] machine learning to healthcare in particular to help with that,” Lebrun said.

Interestingly, Lebrun didn’t mention this to me, but he would have made that observation just as RPA (robotic process automation) was picking up momentum in the market.

RPA really brought enterprise automation to the front of people’s minds. But assisting doctors in live consultations is a more complex matter than mechanising rote work. Still, with a relatively finite set of language and subject variables at play in a doctor-patient consultation, it was an ideal scenario for an AI-based assistant.

Lebrun discussed the idea with Yann LeCun, who was his boss at the time and is still Facebook’s chief AI research scientist. LeCun endorsed his thinking, so Lebrun left, and LeCun became one of the first investors in Nabla.

It took a couple more years for Nabla to disclose that and other funding (it has raised nearly $23 million), which the startup held off announcing to coincide with its first product. That was a health Q&A “super app” for women that let them track different health-related questions and combine that information with other data; it appeared designed mainly as a vehicle to help Nabla figure out what people were looking for in remote health interactions, and what could be built out of that.

This was followed last year by a more generalized “health tech stack for patient engagement”, which is interesting in that it played a little on the central metric of Lebrun’s earlier products: engagement.

You might be somewhat skeptical of a startup that aims to fix something broken in healthcare with no medical professionals among its founders. Besides Lebrun, they are COO Delphine Groll, who previously led business development and communications for media groups, and CTO Martin Raison, who has worked with Lebrun since Wit.ai.

That was a sticking point for Lebrun, too: he told me he considered putting the venture on pause in its early days to go to medical school himself.

He opted not to, choosing instead to draw on feedback and information from doctors and other clinicians, and to hire clinicians to work with the startup and help steer its roadmap. That is how Nabla arrived at today’s standalone product, Copilot.

“Nabla Copilot is designed for clinicians who want to be on the cutting edge of medicine,” said Jay Parkinson, MD, MPH, and Chief Medical Officer at Nabla, in a statement. “As a physician, I know that doctors are always short on time and have better things to do than fill out the [electronic health record]. With Nabla’s super-powered clinical notes, doctors can now look their patients in the eye throughout the consultation, and make sure they remember every word they say by sending the encounter summary.” Parkinson, who joined the startup recently, is an entrepreneur himself: his telehealth startup Sherpaa Health was acquired by Crossover.

Improving AI has generally come to depend on training on ever more data, and that has been a tricky part of building Copilot. Data sharing is opt-in throughout, no data is ever stored on the company’s servers, and Copilot is HIPAA and GDPR compliant. Data from those who do agree to share training information is run through “pseudonymisation algorithms” built in house. And for now, there are no plans to build clinical assistants: no diagnosis suggestions, or anything else like them.

According to Lebrun, that was easier said than done. While it was being built, Nabla’s AI kept trying to provide diagnostics to its users automatically, even when the engineers didn’t ask it to and tried to stop it, he said.

“We don’t want to overstep and do diagnostics,” he said, “so we had to train our AI not to do that.”

That might become something else, “a different product”, in the distant future, he said, but a lot more development and fool-proofing would be needed first.

“We don’t believe in chatbots for medicine,” he added. “We want to make doctors’ lives better by saving them time.”

Nabla, a digital health startup, launches Copilot, using GPT-3 to turn patient conversations into action by Ingrid Lunden originally published on TechCrunch

Apple launches a new way to shop online for iPhones with help from a live specialist

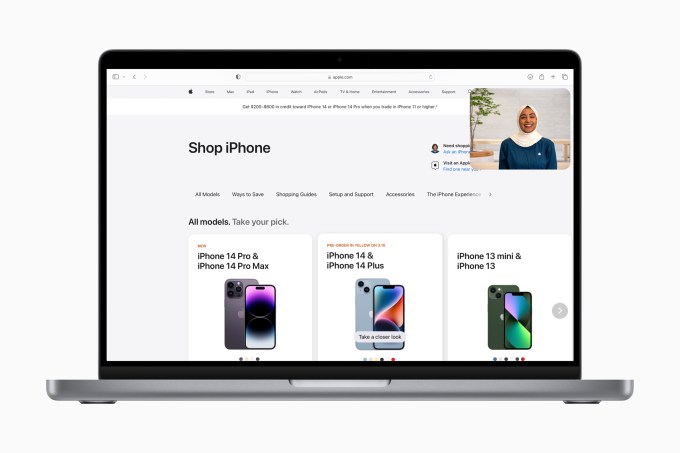

Apple today is announcing a new way to shop for iPhones. The company is introducing a new service, “Shop with a Specialist,” that will offer customers in the U.S. a live video shopping experience directly on the apple.com website. With the free service, customers will be able to browse the latest iPhone models, compare features, and have an Apple Specialist answer their questions and help them find the best deal through the Apple Trade In program or their carrier.

Customers can also learn more about specific iPhone features and about switching to iOS in general, and can get help comparing the different phones, colors, sizes, and more, Apple says. The service goes live today, just as the new yellow iPhone 14 and iPhone 14 Plus go on sale.

Though live video shopping has struggled to take off in the U.S., with even Facebook and Instagram recently exiting the market, the move to put Apple retail specialists online is part of a larger shift at the company to direct more consumers to its e-commerce website to complete their sales. These days, it’s no longer the norm for customers to line up at Apple stores on the iPhone launch day, as many consumers now shop and place pre-orders online. But without going into the store, Apple is losing out on one of its key specialties — the high-touch customer service its retail specialists provide.

Apple says its new live shopping experience will be available on its Apple.com store website at apple.com/shop/buy-iphone and will be open every day from 7 AM to 7 PM PT in the U.S. During the live video session, the Apple Specialist will be on camera and sharing their screen, but they won’t be able to see the customer. Specialists can help with a range of questions, from tech specs to deals to the various financing options Apple offers, and more. At launch, U.S. customers will be able to get a credit of up to $600 toward the new iPhone 14 if they trade in an iPhone 11 or later, whether through the new service, the Apple Store app or an Apple Store location.

The new service expands on other existing Apple customer support technologies, which include

Image Credits: Apple

The launch arrives at a critical time for smartphone makers, as new reports indicate that last year’s sales were the lowest in nearly a decade, down 11% from 2021. Though Apple held the top spot in the fourth quarter in terms of shipments, Samsung beat it for the full year with 257.9 million shipments and a 22% market share, versus Apple’s 232.2 million and 19% share.

In addition, Apple ended the fourth quarter with its first-ever double-digit decline in sales, caused by Covid restrictions on its Chinese manufacturing facilities and weaker demand, according to the same Canalys report. It’s no surprise, then, that Apple is now trying to juice sales not only by introducing a new iPhone color in the middle of the iPhone 14’s selling cycle, but also with a new way to shop for iPhones.

Further down the road, we understand Apple will expand Shop with a Specialist to other flagship products beyond the iPhone.

Apple launches a new way to shop online for iPhones with help from a live specialist by Sarah Perez originally published on TechCrunch

UK gov’t asks National Cyber Security Centre to review TikTok

The UK government has asked the National Cyber Security Centre (NCSC) to review TikTok in a move that could prefigure a ban on the app on government devices.

Speaking to Sky News, security minister Tom Tugendhat said the government has asked the NCSC to look into the popular video sharing app — telling the broadcaster he did not rule out a ban but wanted to wait for the Centre’s review to conclude. “Understanding exactly what the challenges that these apps pose, what they are asking for and how they’re reaching into our lives is incredibly important,” he said.

The development follows a report in the Sunday Times at the weekend suggesting a ban is coming. According to the newspaper, TikTok is set to be banned from all government devices after initial security assessments raised concerns about the safety of sensitive data.

We’ve reached out to the Cabinet Office about the NCSC review and the reports of an incoming ban, and will update this report if we get a response. Update: A spokesman declined further comment, saying only: “All departments have robust processes in place to ensure government IT devices are secure, including managing risks from third party applications.”

TikTok was also contacted for comment. A company spokesperson said:

While we await details of any specific concerns the UK government may have, we would be disappointed by such a move. Similar decisions elsewhere have been based on misplaced fears and seemingly driven by wider geopolitics, but we remain committed to working with the government to address any concerns. We have begun implementing a comprehensive plan to further protect our European user data, which includes storing UK user data in our European data centres and tightening data access controls, including third-party independent oversight of our approach.

Concerns about security and the privacy of TikTok user data, along with worries that the video-sharing platform’s algorithm-driven content feed could be used as a conduit for Chinese Communist Party propaganda or to run state-backed information operations seeking to manipulate public opinion in the West, have led to a series of bans on the China-owned app by other governments and public institutions in recent months, including the European Commission, the Belgian federal government and the US House of Representatives.

Back in mid-2020, the Indian government went even further, banning TikTok and a swathe of other Chinese-made apps (meaning citizens can’t even download them for personal use) and saying it was taking the step over concerns the software posed a risk to national security and to “the sovereignty and integrity of India”, as it put it at the time.

Former US president Donald Trump also caused headaches for TikTok later the same year — when he inked an executive order banning transactions with ByteDance, the parent company of TikTok, and also sought to ban the app from the US.

The Trump TikTok ban ended up stalled, and a subsequent attempt by him to force the Chinese company to sell TikTok’s US operations to Oracle was also shelved. But while the next US president, Joe Biden, went on to revoke the Trump administration’s TikTok actions, he also signed a new order requiring the Commerce Department to review apps with ties to “jurisdiction of foreign adversaries” that may pose national security risks, so US attention on TikTok remains.

The company has responded to Western security concerns by announcing a number of major data localization infrastructure programs.

Last year it claimed all US users’ data had been moved to Oracle servers in the US. Similar moves in the EU are ongoing (meaning EU users’ data hasn’t yet been ‘localized’), but TikTok recently dialled up its regional PR efforts, saying it will layer new data access and control processes on top of locally stored data and promising to engage an external auditor, in a bid to counter concerns that employees outside the bloc can still access European TikTok user data.

In parallel, the overarching question of whether TikTok’s regional data exports are legal remains under investigation by EU data protection authorities.

The social media platform is facing further bad press in the region today: A report in the Financial Times contains allegations the company mishandled claims of sexual harassment against a senior manager at its London office. Five former employees told the newspaper they had experienced or personally witnessed sexual harassment at the organisation in its UK and European offices.

In a statement responding to the FT’s report, a TikTok spokesperson said:

“Harassment of any kind in our workplace is completely unacceptable and will be met with the strongest form of disciplinary action possible. We’re fully confident in the rigour of our process for surfacing, investigating and taking action on any and all complaints of this nature.”

UK gov’t asks National Cyber Security Centre to review TikTok by Natasha Lomas originally published on TechCrunch

https://techcrunch.com/2023/03/14/tiktok-uk-ncsc-security-review/