Category: TECHNOLOGY

Teenage Engineering teases the tape recorder of my dreams, only it’s digital

The kooky geniuses at Teenage Engineering are back with a new gadget that is guaranteed to make you salivate until its price tag smacks you back to reality.

The maker of drool-worthy synthesizers just announced the TP-7, a teeny portable recorder that features a “motorized tape reel,” which spins as it captures audio and also functions like a click wheel.

Teenage Engineering imbued this digital recorder with layers of nostalgia, evoking both the early-ish days of digital (like Apple’s original iPod, Gizmodo points out) and the yet-more-distant days of tape. The TP-7 also seems to pull from some iconic camera designs; its recording indicator beams like Leica’s red dot, while its leather back seems to draw from Polaroid’s classic SX-70.

In other words: The Stockholm-based audio company is teasing yet another gorgeous, totally overbuilt and arguably unnecessary gadget. It’s $1,499 and “coming this summer.”

I want one desperately.

Okay, okay, I’ll focus — promise.

The TP-7 also features record, play and stop buttons, a fast-forward/rewind trigger, 128 GB of storage, and an internal mic and speaker. The device supports up to three external mics (via 3.5mm audio jacks), and it can connect to an iPhone or laptop via USB-C or Bluetooth. Teenage Engineering says its battery lasts around seven hours.

The TP-7 is part of Teenage Engineering’s high-end “Field System” collection, alongside the OP-1 music maker and CM-15 mic. But if you want a taste of Teenage Engineering’s quirky gear without the prohibitive price tag, you can still peep its pocket synths, which are largely “sold out” on its website but typically cost just north of $100 on sites like Reverb and eBay.

Better yet, nostalgia-chasers who long for the days of cassettes can simply opt for a bona fide shoebox or multi-track tape recorder.

Teenage Engineering teases the tape recorder of my dreams, only it’s digital by Harri Weber originally published on TechCrunch

https://techcrunch.com/2023/05/11/teenage-engineering-tp-7-digital-tape-recorder-of-my-dreams/

Hands on with Google’s AI-powered music generator

Can AI work backward from a text description to generate a coherent song? That’s the premise of MusicLM, the AI-powered music creation tool Google released yesterday during the kickoff of its I/O conference.

MusicLM, which was trained on hundreds of thousands of hours of audio to learn to create new music in a range of styles, is available in preview via Google’s AI Test Kitchen app. I’ve been playing around with it for the past day or so, as have a few of my colleagues.

The verdict? Let’s just say MusicLM isn’t coming for musicians’ jobs anytime soon.

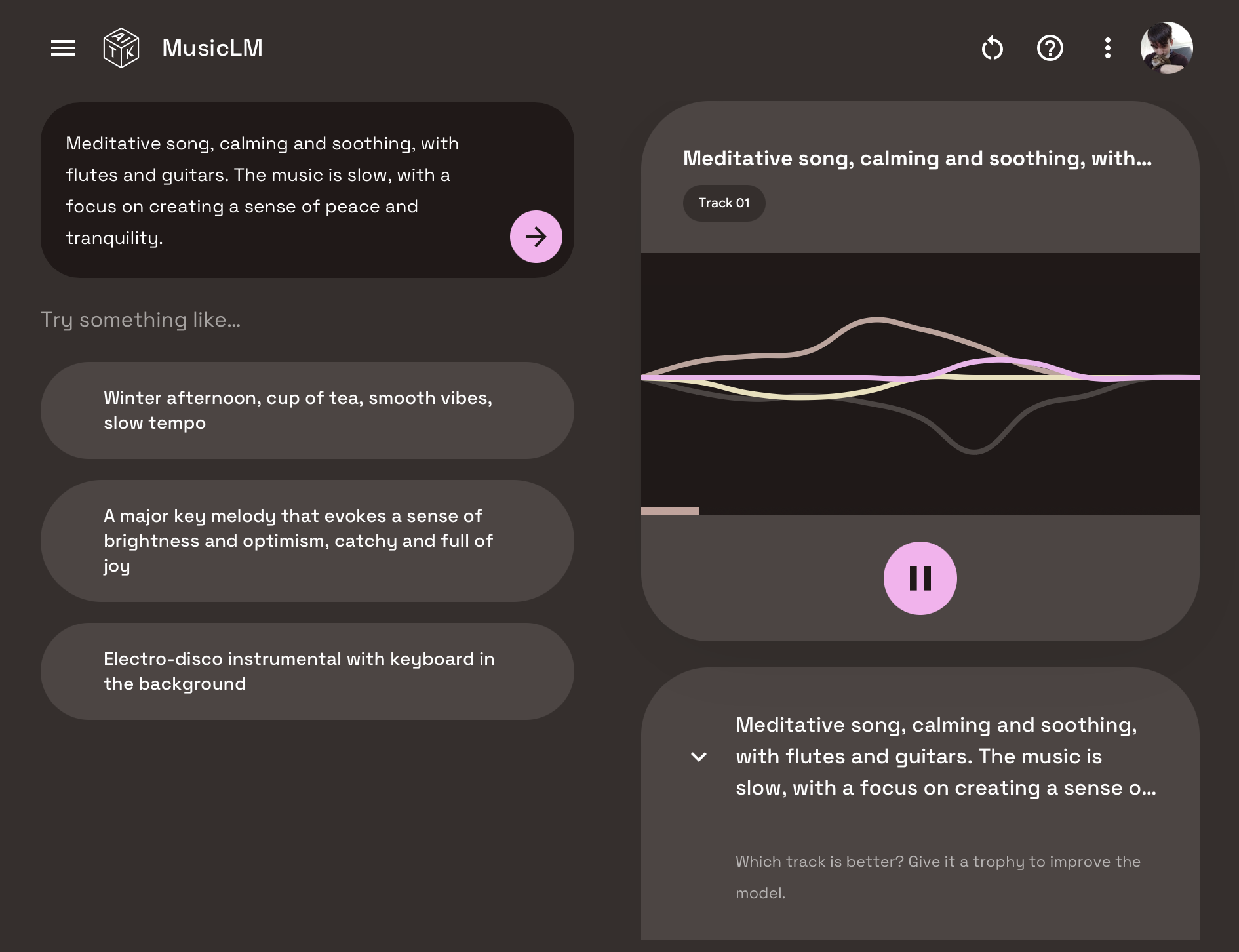

Using MusicLM in Test Kitchen is pretty straightforward. Once you’re approved for access, you’re greeted with a text box where you can enter a song description — as detailed as you like — and have the system generate two versions of the song. Both can be downloaded for offline listening, but Google encourages you to “thumbs up” one of the tracks to help improve the AI’s performance.

Image Credits: Google

When I first covered MusicLM in January, before it was released, I wrote that the system’s songs sounded something like what a human artist might compose — albeit not necessarily as musically inventive or cohesive. Now, I can’t say I entirely stand by those words, as it seems clear that there was some serious cherry-picking going on with samples from earlier in the year.

Most songs I’ve generated with MusicLM sound passable at best — and at worst like a four-year-old let loose on a DAW. I’ve mostly stuck to EDM, trying to yield something with structure and a discernible (plus pleasant, ideally) melody. But no matter how decent — even good! — the beginning of MusicLM’s songs sounds, there comes a moment when they break down in a very obvious, musically unpleasing way.

For example, take this sample, generated using the prompt “EDM song in a light, upbeat and airy style, good for dancing.” It starts off promising, with a head-bobbing bassline and elements of a classic Daft Punk single. But toward the middle of the track, it veers wayyyyy off course — practically another genre.

Here’s a piano solo from a simpler prompt — “romantic and emotional piano music.” Parts, you’ll notice, sound well and fine — exceptional even, at least in terms of the finger work. But then it’s as if the pianist becomes possessed by mania. A jumble of notes later, and the song takes on a radically different direction, as if from new sheet music — albeit along the lines of the original.

I tried MusicLM’s hand at chiptunes for the heck of it, figuring the AI might have an easier time with songs of a more basic construction. No dice. The result (below), while catchy in parts, ended just as randomly as the other samples.

On the plus side, MusicLM, on the whole, does a much better job than Jukebox, OpenAI’s attempt several years ago at creating an AI music generator. In contrast to MusicLM, the songs Jukebox produced lacked typical musical elements like choruses that repeat and often contained nonsense lyrics. MusicLM-produced songs contain fewer artifacts, as well, and generally feel like a step up where it concerns fidelity.

Jukebox, which OpenAI (the San Francisco-based lab behind DALL-E 2) detailed several years ago as its grand experiment with music generation, could generate relatively coherent music complete with vocals when given a genre, artist and a snippet of lyrics.

MusicLM’s usefulness is a bit limited besides, thanks to artificial limitations on the prompting side. It won’t generate music featuring artists or vocals, not even in the style of particular musicians. Try typing a prompt like “along the lines of Barry Manilow” and you’ll get nothing but an error message.


The reason’s likely legal. Deepfaked music stands on murky legal ground, after all, with some in the music industry arguing that AI music generators like MusicLM violate music copyright. It might not be long before there’s some clarity on the matter. Several lawsuits making their way through the courts will likely have a bearing on music-generating AI, including one pertaining to the rights of artists whose work is used to train AI systems without their knowledge or consent. Time will tell.

Hands on with Google’s AI-powered music generator by Kyle Wiggers originally published on TechCrunch

https://techcrunch.com/2023/05/11/hands-on-with-googles-ai-powered-music-generator/

Pudgy Penguin’s $9M seed round could point to a growing NFT industry

Welcome back to Chain Reaction.

Earlier this week, Pudgy Penguins, an NFT collection that also doubles as a web3 IP company, raised $9 million for its seed round.

Why does this matter?

Pudgy Penguins isn’t the first NFT-focused company or collection to raise money. However, its seed round is another sign that the digital asset subsector is growing, and that projects that might look like just a collection of profile pictures (PFPs) can be turned into something more.

For context: Last September, Doodles raised $54 million at a $704 million valuation in a round led by Reddit co-founder Alexis Ohanian’s VC firm Seven Seven Six. And in March 2022, Yuga Labs, the company behind three blue-chip NFT collections, raised $450 million at a $4 billion valuation.

Pudgy Penguins, which reminds me of Club Penguin, was launched in 2021 and acquired by Los Angeles-based entrepreneur and web3 enthusiast Luca Netz in April 2022. Since then, it has expanded from 8,888 NFTs to a suite of real-life products and experiences, providing collection owners with live events, physical merchandise and licensing opportunities.

The collection’s round was led by 1kx, an early-stage investment firm, and saw participation from Big Brain Holdings, Kronos Research, the founders of LayerZero Labs, Old Fashion Research and CRIT Ventures. The funds will be used to scale its intellectual property and its team, and to “enhance offerings” for its community.

As it stands, Pudgy Penguins holds the 21st position by all-time NFT sales volume across all chains. The collection has over $252 million in lifetime sales and in the past 30 days, it had $4.2 million in sales, up 102% during that time frame, according to CryptoSlam data.

Of the 8,888 NFTs, only 566, or 6.4%, are listed for sale, according to its website. The floor price, or lowest selling price, for a Pudgy Penguin is about 4.18 ether, or $7,700, at current prices. The most expensive one is currently listed for 900 ether, or $1.6 million, even though it was sold two years ago for 0.009 ether, or about $16.
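The figures above can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only. It uses just the numbers quoted in this piece, and the dollar-per-ether rate it derives is a back-calculation from rounded figures, not a quoted market price. The function names are hypothetical.

```python
# Figures as quoted in the article; anything derived from them is approximate.
TOTAL_NFTS = 8_888
LISTED_NFTS = 566


def percent_listed(listed: int, total: int) -> float:
    """Share of the collection currently listed for sale, in percent."""
    return listed / total * 100


def implied_usd_per_ether(usd: float, ether: float) -> float:
    """Back out the USD-per-ether rate implied by a quoted (dollar, ether) pair."""
    return usd / ether


share = percent_listed(LISTED_NFTS, TOTAL_NFTS)
floor_rate = implied_usd_per_ether(7_700, 4.18)        # floor price pair
top_rate = implied_usd_per_ether(1_600_000, 900)       # most expensive listing pair

print(f"Listed share: {share:.1f}%")
print(f"Implied rate from floor price: ${floor_rate:,.0f} per ether")
print(f"Implied rate from top listing: ${top_rate:,.0f} per ether")
```

The two implied rates differ slightly because the dollar amounts in the text are rounded, which is why the conversions should be read as approximate.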

Going forward, Pudgy Penguins, and other big NFT collections, could serve as leaders paving the way for larger intellectual property moves in the subsector. These projects aren’t just making money through sales, but through investors, too. And with that, there could be a snowball effect (no pun intended).

This week in web3

Tensor’s on a climb to the top of the Solana NFT marketplace totem pole (TC+)

Just two months ago, NFT trading platform Tensor raised $3 million in a seed round. Fast-forward to today and it is close to regaining its position as the biggest Solana-based NFT marketplace, based on market share. The platform launched a private beta in June 2022 and opened to the public the following month. In March, it had over 30,000 monthly active users, and by April, its MAU was up about 317% to over 125,000, co-founder Ilja Moisejevs said.

Crypto needs a global view to build better regulatory models (TC+)

The crypto industry is increasingly worried that U.S. regulators are clamping down too hard on the space. Predictably, that’s making companies in the space look outwards to regions that have clearer guidelines in place, and it seems there are lessons the industry, and regulators around the world, can learn from looking beyond their borders.

Former FTX CEO Sam Bankman-Fried seeks to dismiss most US charges against him

Former FTX CEO and founder Sam Bankman-Fried has filed a pretrial motion to dismiss 10 out of 13 charges against him, according to court documents. In Monday’s filing, Bankman-Fried’s attorneys from law firm Cohen & Gresser seek to dismiss the conspiracy to commit wire fraud and bank fraud charges. The lawyers also seek to dismiss a few other charges, including bribery and political contribution charges. However, his attorneys did not move to dismiss three charges: conspiracy to commit securities fraud, securities fraud and conspiracy to commit money laundering.

Arbitrum co-founder sees DAO’s resolution to voter drama as a ‘testament to decentralization’ (TC+)

It’s been a little over a month since the Arbitrum Foundation drama, where the foundation transferred funds from Arbitrum DAO without the community’s approval, sparking an uproar. But if you ask Steven Goldfeder, CEO and co-founder of Offchain Labs, that blunder was just one of the early steps on the journey to decentralization.

Mastercard, PayPal and Robinhood dive deeper into crypto as industry shows ‘promise’ (TC+)

As the crypto market works its way through a downturn, more incoming money and users could help it weather the storm. But right now, it’s sometimes challenging for the layperson to get into crypto. Understanding gas fees and wallets isn’t intuitive, and the perceived miasma of complication that currently surrounds the space is no help, either. To help foster user adoption and the resulting capital inflow, web3 needs smoother on- and off-ramps to make it easier to buy into and interact with blockchains. Trusted providers with existing mainstream audiences are betting they can help fill that gap.

Investors cheer as Coinbase beats Q1 expectations (TC+)

Coinbase reported its Q1 2023 financial results, handily beating expectations. In the first three months of the year, the U.S. cryptocurrency exchange generated net revenues of $736 million, a $79 million net loss and adjusted EBITDA of $284 million. Analysts had expected a far slimmer $655 million in revenue and a larger loss from the company in the first quarter. In after-hours trading, shares of Coinbase are up a little more than 7%. Certainly, Coinbase’s results are a welcome dataset for both crypto bulls and investors in the company alike.

The latest pod

This week we have a bonus episode from a fireside chat Jacquelyn did with Nadya Tolokonnikova, the creator of the protest art collective Pussy Riot, at NFT NYC in April.

Tolokonnikova was sentenced to two years of imprisonment in 2012 after being found guilty of “hooliganism motivated by religious hatred,” but was released early under amnesty.

Fast-forward to 2023 and Tolokonnikova has continued to use the Pussy Riot name to fight in favor of women and LGBTQ people’s rights and against Russia’s control under President Vladimir Putin. In March, Tolokonnikova was added to Russia’s most wanted criminals list.

Tolokonnikova has also spoken before the U.S. Congress, British Parliament and European Parliament, and has appeared on TV shows like House of Cards.

We dove into a deep conversation surrounding Tolokonnikova’s mission, how she uses NFTs as a form of activism and how she got into the space.

We also discussed:

- How others can use NFTs for activism

- Future visions for NFT utility

- Advice for projects in the space

ICYMI: On last week’s episode, Jacquelyn interviewed Jake Chervinsky, the chief policy officer at Blockchain Association, a nonprofit organization focused on promoting “pro-innovation” policy for the digital asset world. He is also a board member of the DeFi Education Fund and an advisor to Variant, a web3 seed-stage fund.

Prior to his work with the Blockchain Association, Chervinsky began his attorney career in private practice with a focus on anti-money laundering and anti-corruption compliance and investigations, financial services litigation and government enforcement defense. He spends a lot of time in DC, testifying at hearings to help provide clarity on the crypto industry in hopes of guiding it in the right direction.

We talked about all things regulation, from how Chervinsky views the current regulatory landscape to whether or not we’re in a “crackdown” era, as people call it.

We also discussed:

- Regulators’ views changing

- American crypto companies

- Are cryptocurrencies commodities or securities

- Stablecoin legislation

- Future legal frameworks and guidelines

Subscribe to Chain Reaction on Apple Podcasts, Spotify or your favorite pod platform to keep up with the latest episodes, and please leave us a review if you like what you hear!

Follow the money

- Crypto media platform Blockworks raised $12 million at a $135 million valuation

- Decentralized crypto wallet provider Odsy Network raised $7.5 million

- Webb Protocol raised $7 million to expand cross-chain privacy

- Multichain NFT ecosystem-focused Artifact Labs raised $3.25 million

- Siphon Lab, a DeFi platform on Sui Network, raised $1.2 million in a seed round

This list was compiled with information from Messari as well as TechCrunch’s own reporting.

To get a roundup of TechCrunch’s biggest and most important crypto stories delivered to your inbox every Thursday at 12 p.m. PT, subscribe here.

Follow me on Twitter @Jacqmelinek for breaking crypto news, memes and more.

Pudgy Penguin’s $9M seed round could point to a growing NFT industry by Jacquelyn Melinek originally published on TechCrunch

Project Starline is the coolest work call you’ll ever take

I don’t have any images from my Project Starline experience. Google had a strict “no photos, no videos” policy in place. No colleagues, either. Just me in a dark meeting room on the Shoreline Amphitheatre grounds in Mountain View. You walk in and sit down in front of a table. In front of you is what looks like a big, flat-screen TV.

A lip below the screen extends out in an arc, encased in a speaker. There are three camera modules on the screen’s edges — on the top and flanking both sides. They look a bit like Kinects in the way all modern stereoscopic cameras seem to.

The all-too-brief seven-minute session is effectively an interview. A soft, blurry figure walks into frame and sits down, as the image’s focus sharpens. It appears to be both a privacy setting and a chance for the system to calibrate its subject. One of the key differences between this Project Starline prototype and the one Google showed off late last year is a dramatic reduction in hardware.

The team has reduced the number of cameras from “several” to a few and dramatically shrunk the overall system, which previously resembled one of those diner booths. The trick here is developing a real-time 3D model of a person with far fewer camera angles. That’s where AI and ML step in, filling in the gaps in data, not entirely unlike the way the Pixel approximates backgrounds with tools like Magic Eraser — albeit with a three-dimensional render.

After my interview subject — a member of the Project Starline team — appears, it takes a bit of time for the eyes and brain to adjust. It’s a convincing hologram — especially for one being rendered in real time, with roughly the same sort of lag you would experience on a plain old two-dimensional Zoom call.

You’ll notice something a bit…off. Humans tend to be the most difficult subjects to render. We’ve evolved over millennia to identify the slightest deviation from the norm. I throw out the term “twitching” to describe the subtle movement on parts of the subject’s skin. He — more accurately — calls them “artifacts.” These are little instances the system didn’t quite nail, likely due to limitations on the data being collected by the on-board sensors. This includes portions with an absence of visual information, which appear as though the artist has run short on paint.

A lot of your own personal comfort level comes down to adjusting to this new presentation of digital information. Generally speaking, when most of us talk to another person, we don’t spend the entire conversation fixated on their corporeal form. You focus on the words and, if you’re attuned to such things, the subtle physical cues we drop along the way. Presumably, the more you use the system, the less calibration your brain requires.

Quoting from a Google research publication on the technology:

Our system achieves key 3D audiovisual cues (stereopsis, motion parallax, and spatialized audio) and enables the full range of communication cues (eye contact, hand gestures, and body language), yet does not require special glasses or body-worn microphones/headphones. The system consists of a head-tracked autostereoscopic display, high-resolution 3D capture and rendering subsystems, and network transmission using compressed color and depth video streams. Other contributions include a novel image-based geometry fusion algorithm, free-space dereverberation, and talker localization.

Effectively, Project Starline is gathering information and presenting it in such a way that creates the perception of depth (stereopsis), using the two spaced-out biological cameras in our skulls. Spatial audio, meanwhile, serves a similar function for sound, calibrating the speakers to give the impression that the speaker’s voice is coming out of their virtual mouth.
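To make stereopsis a little more concrete: in a simple two-camera (or two-eye) pinhole model, the same point lands at slightly different horizontal positions in each view, and that offset, called disparity, shrinks as the point moves farther away. The sketch below uses the textbook relation depth = focal length × baseline / disparity. It is not Project Starline’s pipeline, which Google describes only at a high level. The focal length, baseline and disparity values are made-up numbers chosen for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by two parallel pinhole cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical setup: 1000 px focal length, 0.065 m baseline (roughly human eye spacing).
FOCAL_PX = 1000.0
BASELINE_M = 0.065

# Halving the disparity doubles the estimated depth.
for disparity in (130.0, 65.0, 32.5):
    z = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity)
    print(f"disparity {disparity:6.1f} px -> depth {z:.2f} m")
```

The inverse relationship is why nearby objects appear to shift more between the two eyes than distant ones.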

Google has been testing this specific prototype version for some time now with WeWork, T-Mobile and Salesforce — presumably the sorts of big corporate clients that would be interested in such a thing. The company says much of the feedback revolves around how true to life the experience is versus things like Google Meet, Zoom and Webex — platforms that saved our collective butts during the pandemic, but still have a good deal of limitations.

You’ve likely heard people complain — or complained yourself — about the things we lost as we moved from the in-person meeting to virtual. It’s an objectively true sentiment. Obviously Project Starline is still very much a virtual experience, but it can probably trick your brain into believing otherwise. For the sake of a workplace meeting, that’s frankly probably more than enough.

There’s no timeline here and no pricing. Google referred to it as a “technology project” during our meeting. Presumably the ideal outcome for all of the time and money spent on such a project is a saleable product. The eventual size and likely pricing will almost certainly be out of reach for most of us. I could see a more modular version of the camera system that clips onto the side of a TV or computer doing well.

For most people in most situations, it’s overkill in its current form, but it’s easy to see how Google could well be pointing to the future of teleconferencing. It certainly beats your bosses making you take calls in an unfinished metaverse.

Project Starline is the coolest work call you’ll ever take by Brian Heater originally published on TechCrunch

https://techcrunch.com/2023/05/11/project-starline-is-the-coolest-work-call-youll-ever-take/

Keepon, carry on

I’m back in the South Bay this week, banging away at an introduction in the hotel lobby a few minutes before our crew heads to Shoreline for Google I/O. There’s a guy behind me in a business suit and sockless loafers, taking a loud business meeting on his AirPods. It’s good to be home.

I’ve got a handful of meetings lined up with startups and VCs and then a quiet, robot-free day and a half in Santa Cruz for my birthday. Knowing I was going to be focused on this developer event all day, I made sure to line some stuff up for the week. Turns out I lined up too much stuff – which is good news for all of you.

In addition to the usual roundup and job openings, I’ve got two great interviews for you.

Two weeks back, I posted about a bit of digging around I was doing in the old MIT pages – specifically around the Leg Lab. It included this sentence, “Also, just scrolling through that list of students and faculty: Gill Pratt, Jerry Pratt, Joanna Bryson, Hugh Herr, Jonathan Hurst, among others. Boy howdy.”

After that edition of Actuator dropped, Bryson noted on Twitter,

Boy howdy?

I never worked on the robots, but I liked the lab culture / vibe & meetings. Marc, Gill & Hugh were all welcoming & supportive (I never got time to visit Hugh’s version though). My own supervisor (Lynn Stein) didn’t really do labs or teams.

I discovered subsequent to publishing that I may well be the last person on Earth saying, “Boy Howdy” who has never served as an editor at Creem Magazine (call me). A day or two before, a gen-Z colleague was also entirely baffled by the phrase. It’s one in a growing list of archaic slang terms that have slowly ingratiated themselves into my vernacular, and boy howdy, am I going to keep using it.

As far as the second (and substantially more relevant) bit of the tweet, Bryson might be the one person on my initial list who I had never actually interacted with at any point. Naturally, I asked if she’d be interested in chatting. As she noted in her tweet, she didn’t work directly with the robots themselves, but her work has plenty of overlap with that world.

Bryson currently serves as the Professor of Ethics and Technology at the Hertie School in Berlin. Prior to that, she taught at the University of Bath and served as a research fellow at Oxford and the University of Nottingham. Much of her work focuses on artificial and natural intelligence, including ethics and governance in AI.

Given all the talk around generative AI, the recent open letter and Geoffrey Hinton’s recent exit from Google, you couldn’t ask for better timing. Below is an excerpt from the conversation we recently had during Bryson’s office hours.

Q&A with Joanna Bryson

Image Credits: Hertie School

You must be busy with all of this generative AI news bubbling up.

I think generative AI is only part of why I’ve been especially busy. I was super, super busy from 2015 to 2020. That was when everybody was writing their policy. I also was working part-time because my partner had a job in New Jersey. That was a long way from Bath. So, I cut back to half time and was paid 30%. Because I was available, and people were like, “we need to figure out our policy,” I was getting flown everywhere. I was hardly ever at home. It seems like it’s been more busy, but I don’t know how much of that is because of [generative AI].

Part of the reason I’m going into this much detail is that for a lot of people, this is on their radar for the first time for some reason. They’re really wrapped up in the language thing. Don’t forget, in 2017, I did a language thing and people were freaked out by that too, and was there racism and sexism in the word embeddings? What people are calling “generative AI” – the ChatGPT stuff – the language part on that is not that different. All the technology is not all that different. It’s about looking at a lot of exemplars and then figuring out, given a start, what things are most likely coming next. That’s very related to the word embeddings, which is for one word, but those are basically the puzzle pieces that are now getting put together by other programs.
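The idea Bryson describes here, looking at many examples and then guessing what most likely comes next, can be illustrated with a toy word-pair counter. This is a deliberately tiny sketch and not how ChatGPT or word embeddings are actually built. The training sentence and the function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Invented example text; "the" is followed by "cat" more often than anything else.
corpus = "the cat sat on the mat the cat ate the fish the cat slept"
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))
print(most_likely_next(model, "robot"))
```

Modern language models replace the raw counts with learned parameters and look at far more context than one preceding word, but the underlying question, what usually comes next, is the same.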

I write about tech for a living, so I was aware of a lot of the ethical conversations that were happening early. But I don’t think most people were. That’s a big difference. All of a sudden your aunt is calling you to ask about AI.

I’ve been doing this since the 80s, and every so often, something would happen. I remember when the web happened, and also when it won chess, when it won Go. Every so often that happens. When you’re in those moments, it’s like, “oh my gosh, now people finally get AI.” We’ve known about it since the 30s, but now we keep having these moments. Everyone was like, “oh my god, nobody could have anticipated this progress in Go.” Miles Brundage showed during his PhD that it’s actually linear. We could have predicted within the month when it was going to pass human competence.

Is there any sense in which this hype bubble feels different from previous?

Hertie School was one of the first places to come out with policy around generative AI. At the beginning of term, I said this new technology is going to come in, in the middle of the semester. We’ll get through it, but it’s going to be different at the end than it was at the beginning. In a way, it’s been more invisible than that. I think probably the students are using it extensively, but it isn’t as disruptive as people think, so far. […] I think part of the issue with technological change is everyone thinks that leads to unemployment and it doesn’t.

The people who have been made most unemployed are everybody in journalism — and not by replacing them but rather by stealing their revenue source, which was advertising. It’s a little flippant, but actually there is this whole thing about telephone operators. They were replaced by simple switches. That was the period when it switched to being more women in college than men, and it was because they were mostly women’s jobs. We got the more menial jobs that were being automated. […]

This is James Bessen’s research. Basically what happens is you bring in a technology that makes it easier to do some task, and then you wind up hiring more people for that task, because they’re each more valuable. Bank tellers were one of the early examples that people talked about, but this has been true in weaving and everything else. Then you get this increase in hiring and then you finally satiate. At some point, there’s enough cloth, there’s enough financial services, and then any further automation causes a gradual decline in the number of people employed in that sector. But it’s not an overnight thing like people think.

You mention these conversations you were having years ago around setting guidelines. Were the ethical concerns and challenges the same as now? Or have they shifted over time?

There’s two ways to answer that question: what were the real ethical concerns they knew they had? If a government is flying you out, what are they concerned about? Maybe losing economic status, maybe losing domestic face, maybe losing security. Although, a lot of the time people think of AI as the goose that laid the golden egg. They think cyber and crypto are the security, when they’re totally interdependent. They’re not the same thing, but they rely on each other.

It drove me nuts when people said, “Oh, we have to rewrite the AI because nobody had been thinking about this.” But that’s exactly how I conceived of AI for decades, when I was giving all of these people advice. I get that bias matters, but it was like if you only talked about water and didn’t worry about electricity and food. Yes, you need water, but you need electricity and food, too. People decided, “Ethics is important and what is ethics? It’s bias.” Bias is a subset of it.

What’s the electricity and what’s the food here?

One is employment and another is security. A lot of people are seeing more how their jobs are going to change this time, and they’re afraid. They shouldn’t be afraid of that so much because of the AI — which is probably going to make our jobs more interesting — but because of climate change and the kinds of economic threats we’re under. This stuff will be used as an excuse. When do people get laid off? They get laid off when the economy is bad, and technology is just an excuse there. Climate change is the ultimate challenge. The digital governance crisis is a thing, and we’re still worrying about if democracy is sustainable in a context where people have so much influence from other countries. We still have those questions, but I feel like we’re getting on top of them. We have to get on top of them as soon as possible. I think that AI and a well-governed digital ecosystem help us solve problems faster.

I’m sure you know Geoffrey Hinton. Are you sympathetic with his recent decision to quit Google?

I don’t want to criticize Geoff Hinton. He’s a friend and an absolute genius. I don’t think all the reasons for his move are public. I don’t think it’s entirely about policy, why he would make this decision. But at the same time, I really appreciate that he realizes that now is a good time to try to help people. There are a bunch of people in machine learning who are super geniuses. The best of the best are going into that. I was just talking to this very smart colleague, and we were saying that 2012 paper by Hinton et al. was the biggest deal in deep learning. He’s just a super genius. But it doesn’t matter how smart you are — we’re not going to get omniscience.

It’s about who has done the hard work and understood economic consequences. Hinton needs to sit down as I did. I went to a policy school and attended all of the seminars. It was like, “Oh, it’s really nice, the new professor keeps showing up,” but I had to learn. You have to take the time. You don’t just walk into a field and dismiss everything about it. Physicists used to do that, and now machine learning people are doing that. They add noise that may add some insight, but there are centuries of work in political science and how to govern. There’s a lot of data from the last 50 years that these guys could be looking at, instead of just guessing.

There are a lot of people who are sending up alarms now.

So, I’m very suspicious about that too. On the one hand, a bunch of us noticed there were weird things. I got into AI ethics as a PhD student at MIT, just because people walked up to me and said things that sounded completely crazy to me. I was working on a robot that didn’t work at all, and they’d say, “It would be unethical to unplug that.” There were a lot of working robots around, but they didn’t look like a person. The one that looked like a person, they thought they had an obligation to.

I asked them why, and they said, “We learned from feminism that the most unlikely things can turn out to be people.” This is motors and wires. I had multiple people say that. It’s hard to derail me. I was a programmer trying not to fail out of MIT. But after it happened enough times, I thought, this is really weird. I’d better write a paper about it, because if I think it’s weird and I’m at MIT, it must be weird. This was something not enough people were talking about, this over-identification with AI. There’s something weird going on. I had a few papers I’d put out every four years, and finally, after the first two didn’t get read, the third one I called “Robots Should be Slaves,” and then people read it. Now all of a sudden I was an AI expert.

There was that recent open letter about AI. If pausing advancements won’t work, is there anything short-term that can be done?

There are two fundamental things. One is, we need to get back to adequately investing in government, so that the government can afford expertise. I grew up in the ’60s and ’70s, when the tax rate was 50% and people didn’t have to lock their doors. Most people say the ’90s [were] okay, so going back to Clinton-level tax rates, which we were freaked out by at the time. Given how much more efficient we are, we can probably get by with that. People have to pay their taxes and cooperate with the government. Because this was one of the last places where America was globally dominant, we’ve allowed it to be under-regulated. Regulation is about coordination. These guys are realizing you need to coordinate, and they’re like “stop everything, we need to coordinate.” There are a lot of people who know how to coordinate. There are basic things like product law. If we just set enough enforcement in the digital sector, then we would be okay. The AI act in the EU is like the most boring thing ever, but it’s so important, because they’re saying we noticed that digital products are products and it’s particularly important to enforcement when you have a system that’s automatically making decisions that affect human lives.

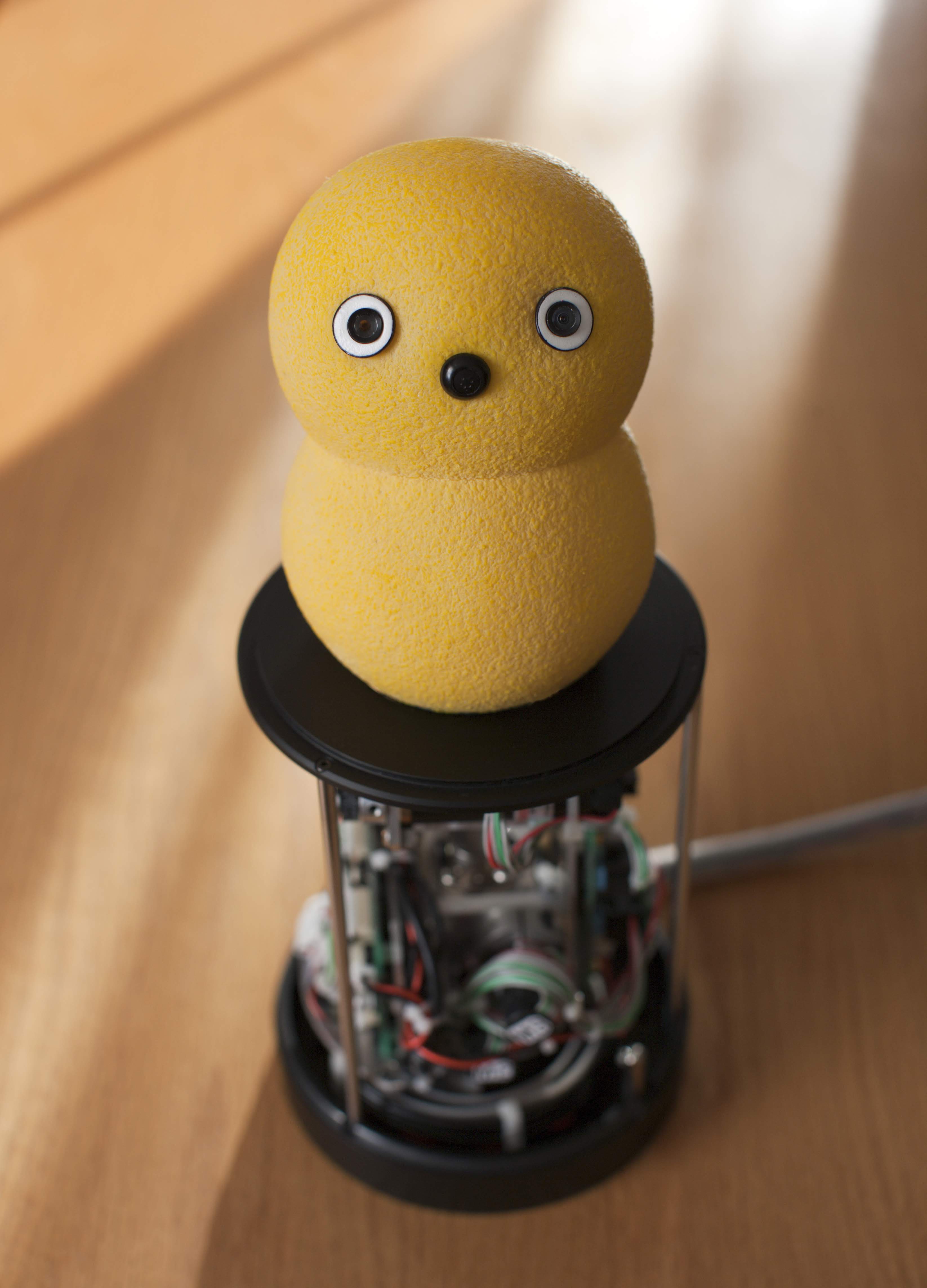

Image Credits: BeatBots LLC / Hideki Kozima / Marek Michalowski

Keepon groovin’

It’s an entirely unremarkable video in a number of ways. A small, yellow robot – two tennis balls formed into an unfinished snowman. Its face is boiled down to near abstraction: two widely spaced eyes stretched above a black button nose. The background is a dead gray, the kind they use to upholster cubicles.

“I Turn My Camera On.” It’s the third track on Spoon’s fifth album, Gimme Fiction, released two years prior – practically 10 months to the day after YouTube went live. It’s the Austin-based indie band’s stripped-down take on Prince-style funk – a perfect little number that could get anyone dancing, be it human or robot. For just over three-and-a-half minutes, Keepon grooves in a hypnotic rhythmic bouncing.

It was the perfect video for the 2007 internet, and the shiny new video site, roughly half a year after being acquired by Google for $1.65 billion. The original upload is still live, having racked up 3.6 million views over its lifetime.

A significantly higher budget follow up commissioned by Wired did quite well the following year, with 2.1 million views under its belt. This time, Keepon’s dance moves enticed passersby on the streets of Tokyo, with Spoon members making silent cameos throughout.

In 2013, the robot’s makers released a $40 commercial version of the research robot under the name My Keepon. A year later, the internet trail runs cold. Beatbots, the company behind the consumer model, posted a few more robots and then silence. I know all of this because I found myself down this very specific rabbit hole the other week. I will tell you that, as of the writing of this, you can still pick up a secondhand model for cheap on eBay – something I’ve been extremely tempted to do for a few weeks now.

I had spoken with cofounder Marek Michalowski a handful of times during my PCMag and Engadget days, but we hadn’t talked since the Keepon salad days. Surely, he must still be doing interesting things in robotics. The short answer is: yes. Coincidentally, in light of last week’s Google-heavy edition of Actuator, it turns out he’s currently working as a product manager at Alphabet X.

I didn’t realize it when I was writing last week’s issue, but his story turns out to be a great little microcosm of what’s been happening under the Alphabet umbrella since the whole robot startup shopping spree didn’t go as planned. Here’s the whole Keepon arc in his words.

Q&A with Marek Michalowski

Let’s start with Keepon’s origin story.

I was working on my PhD in human robot interaction at Carnegie Mellon. I was interested in this idea of rhythmic synchrony and social interaction, something that social psychologists were discovering 50 years ago in video recorded interactions of people in normal situations. They were drawing out these charts of every little micro movement and change in direction and accent in the speech and finding that there are these rhythms that are in sync within a particular person — but then also between people. The frequency of nodding and gesturing in a smooth interaction ends up being something like a dance. The other side of it is that when those rhythms are kind of unhealthy or out of sync, that that might be indicative of some problem in the interaction.

You were looking at how we can use robots to study social interaction, or how robots can interact with people in a more natural way?

Psychologists have observed something happening we don’t really understand — the mechanisms. Their robots can both be a tool for us to experiment and better understand those social rhythmic phenomena. And also in the engineering problem of building better interactive robots, those kinds of rhythmic capabilities might be an important part of that. There’s both the science question that could be answered with the help of robots, but also the engineering problem of making better robots that would benefit from an answer to that question.

The more you know about the science, the more you’re able to put that into a robot.

Into the engineering. Basically, that was high level interest. I was trying to figure out what’s a good robotic medium for testing that. During that PhD, I was doing sponsored research trips to Japan, and I met this gentleman named Hideki Kozima, who had been a former colleague of one of my mentors, Brian Scassellati. They had been at MIT together working on the Cog and Kismet projects. I visited Dr. Kozima, who had just recently designed and built the first versions of Keepon. He had originally been designing humanoid robots, and also had psychology research interests that he was pursuing through those robots. He had been setting up some interactions between this humanoid and children, and he noticed this was not a good foundation for kind of naturalistic, comfortable social interactions. They’re focusing on the moving parts and the complexity.

Keepon was the first robot I recall seeing with potential applications for autism treatment. I’ve been reading a bit on ASD recently, and one of the indicators specialists look for is a lack of sustained eye contact and an inability to maintain the rhythm of conversation. With the other robot, the issue was that the kids were focused on the visible moving parts, instead of the eyes.

That’s right. With Keepon, the whole mechanism is hidden away, and it’s designed to really draw attention to those eyes, which are cameras. The nose is a microphone, and the use case here was for a researcher or therapists to be able to essentially puppeteer this robot, from a distance in the next room. Over the long term, they could observe how different children are engaging with this toy, and how those relationships develop over time.

There were two Spoon videos. The first was “I Turn My Camera On.”

I sent it to some friends, and they were like, “this is hilarious. You should put it on YouTube.” YouTube was new. This was, I think, March 2007. I actually wrote to the band’s management, and said, “I’m doing this research. I used your song in this video. Is it okay if I put it up on YouTube?” The manager wrote back, like, “oh, you know, let me check with [Britt Daniel].” They wrote back, “nobody ever asks, thanks for asking. Go ahead and do it.”

It was the wild west back then.

It’s amazing that that video is still there and still racking up views, but within a week, it was on the front page of YouTube. I think it was a link from Boing Boing, and from there, we had a lot of incoming interest from Wired Magazine. They set up the subsequent video that we did with the band in Tokyo. On the basis of those kinds of 15 minutes of fame, there was a lot of inbound interest from other researchers at various institutions and universities around the world who were asking, “Hey, can I get one of these robots and do some research with it?” There was also some interest from toy companies, so Dr. Kozima and I started Beatbots as a way of making some more of these research robots, and then to license the Keepon IP.

[…]I was looking to relocate myself to San Francisco, and I had learned about this company called Bot and Dolly — I think it was from a little half page ad in Wired Magazine. They were using robots in entertainment in a very different way, which is on film sets to hold cameras and lights and do the motion control.

They did effects for Gravity.

Yes, exactly. They were actually in the midst of doing that project. That was a really exciting and compelling use of these robots that were designed for automotive manufacturing. I reached out to them, and their studio was this amazing place filled with robots. They let me rent room in the corner to do Beatbots stuff, and then co-invest in a machine shop that they wanted to build. I set up shop there, and over the next couple of years I became really interested in the kinds of things they were doing. At the same time, we were doing a lot of these projects, which we were talking with various toy companies about. Those are on the Beatbots website. […]You can do a lot when you’re building one research robot. You can craft it by hand and money is no object. You can buy kind of the best motors and so forth. It’s a very different thing to put something in a toy store and the retail price is roughly four times the like bill of materials.

Image Credits: BeatBots LLC / Hideki Kozima / Marek Michalowski

The more you scale, the cheaper the components get, but it’s incredibly hard to hit a $40 price point with a first-gen hardware project.

With mass commercial products, that’s the challenge of how can you reduce the number of motors and what tricks can you do to make any given degree of freedom serve multiple purposes. We learned a lot, but also ran into physics and economics challenges.

[…]I needed to decide, do I want to push on the boundaries of robotics by making these things as inexpensively as possible? Or would I rather be in a place where you can use the best available tools and resources? That was a question I faced, but it was sort of answered for me by the opportunities that were coming up with the things that Bot and Dolly was doing.

Google acquired Bot and Dolly with eight or so other robotics companies, including Boston Dynamics.

I took that up. That’s when the Beatbots thing was put on ice. I’ve been working on Google robotics efforts for — I guess it’s coming on nine years now. It’s been really exciting. I should say that Dr. Kozima is still working on Keepon in these research contexts. He’s a professor at Tohoku University.

News

Image Credits: 6 River Systems

Hands down the biggest robotics news of this week arrived at the end of last week. After announcing a massive 20% cut to its 11,600-person staff, Shopify announced that it was selling off its Shopify Logistics division to Flexport. Soon after, word got out that it had also sold off 6 River Systems to Ocado, a U.K. licenser of grocery technology.

I happened to speak to 6 River Systems cofounder Jerome Dubois about how the initial Shopify/6 River deal was different than Amazon’s Kiva purchase. Specifically, the startup made its new owner agree to continue selling the technology to third parties, rather than monopolizing it for its own 3PL needs. Hopefully the Ocado deal plays out similarly.

“We are delighted to welcome new colleagues to the Ocado family. 6 River Systems brings exciting new IP and possibilities to the wider Ocado technology estate, as well as valuable commercial and R&D expertise in non-grocery retail segments,” Ocado CEO James Matthews said in a release. “Chuck robots are currently deployed in over 100 warehouses worldwide, with more than 70 customers. We’re looking forward to supporting 6 River Systems to build on these and new relationships in the years to come.”

Image Credits: Locus Robotics

On a very related note, DHL this week announced that it will deploy another 5,000 Locus Robotics systems in its warehouses. The two companies have been working together for a bit, and the logistics giant is clearly quite pleased with how things have been going. DHL has been fairly forward-thinking about warehouse automation, including the first major purchase of Boston Dynamics’ truck unloading robot, Stretch.

Locus remains the biggest player in the space, while managing to remain independent, unlike its largest competitor, 6 River. CEO Rick Faulk recently told me that the company is planning an imminent IPO, once market forces calm down.

A sorter machine from AMP Robotics.

Recycling robotics heavyweight AMP Robotics this weekend announced a new investment from Microsoft’s Climate Fund, pushing its $91 million Series C up to $99 million. There has always been buzz around the role robotics could/should have in addressing climate change. The Denver-based firm is one of the startups tackling the issue head-on. It’s also a prime example of the “dirty” part of the three robotic Ds.

“The capital is helping us scale our operations, including deploying technology solutions to retrofit existing recycling infrastructure and expanding new infrastructure based on our application of AI-powered automation,” founder and CEO Matanya Horowitz told TechCrunch this week.

Image Credits: Amazon

Business Insider has the scoop on an upcoming version of Amazon’s home robot, Astro. We’ve known for a while that the company is really banking on the product’s success. It seems like a longshot, given the checkered history of companies attempting to break into the home robotics market. iRobot is the obvious exception. Not much update on that deal, but last we heard about a month or so ago is that regulatory concerns have a decent shot at sidelining the whole thing.

Astro is an interesting product that is currently hampered by pricing and an unconvincing feature set. It’s going to take a lot more than what’s currently on offer to change the tide in home robots. We do know that Amazon is currently investing a ton into catching up with the likes of ChatGPT and Google on the generative AI front. Certainly, a marriage of the two makes sense. It’s easy to see how conversational AI could go a long way in a product like Astro, whose speech capabilities are currently limited.

Robot Jobs for Human People

Agility Robotics (20+ Roles)

ANYbotics (20+ Roles)

AWL Automation (29 Roles)

Bear Robotics (4 Roles)

Canvas Construction (1 Role)

Dexterity (34 Roles)

Formic (8 Roles)

Keybotic (2 Roles)

Neubility (20 Roles)

OTTO Motors (23 Roles)

Prime Robotics (4 Roles)

Sanctuary AI (13 Roles)

Viam (4 Roles)

Woven by Toyota (3 Roles)

Image Credits: Bryce Durbin/TechCrunch

Keepon, carry on by Brian Heater originally published on TechCrunch

TuSimple continues its slide into the ether with new delisting warning

It wasn’t that long ago that self-driving trucks company TuSimple was on a tear — raising funds, locking in partnerships and hitting some development milestones that seemed to push it to the front of the AV pack.

A string of internal scandals and executive upheaval that resulted in the ousting of co-founder Xiaodi Hou, an SEC investigation and a restructuring in December has left the former darling of the nascent self-driving trucks sector in shambles. And now it’s on the cusp of being delisted from the Nasdaq exchange.

The company reported Thursday that it received a delisting notice from the Nasdaq for failing to file its quarterly report on time. TuSimple hasn’t filed a quarterly report for the fourth quarter or full-year results. It last reported earnings for the quarter ended September 30.

Shares fell nearly 30% in trading Thursday to $0.80.

Nasdaq will suspend trading of TuSimple shares on May 15 unless it files an appeal. TuSimple said it intends to appeal and will ask for an extended stay of the suspension of trading until its hearing with Nasdaq.

The company also said its board has approved the appointment of UHY LLP as its new independent registered public accounting firm for the fiscal year ended December 31, 2022.

When TuSimple launched in 2015 it was one of the first autonomous trucking startups to emerge in what has become a small, yet bustling industry that now includes Aurora, Kodiak and Waymo. TuSimple’s founding team and its earliest backers Sina and Composite Capital are from China, but the company positioned itself as a U.S. company with its global headquarters in San Diego and later an engineering center and truck depot in Tucson and a facility in Texas to support its autonomous trips.

The company scooped up capital from a diverse consortium of strategic investors, including Volkswagen AG’s heavy-truck business The Traton Group, Navistar, Goodyear, and freight company U.S. Xpress. And in March 2021 it filed for an IPO, eschewing the financial SPAC trend.

Despite its upward trajectory there were problems lingering in the background. Its S-1 flagged a regulatory risk due to its Chinese funding source. The Committee on Foreign Investment in the United States would eventually conclude its review and TuSimple would work to offload the Chinese piece of its business.

TuSimple shares would reach their peak in July 2021 when the price hit $62.58. Over the next six months, the share price dropped by half. But the real fall would begin in late December 2021. Shares have fallen 97% since then.

In 2022, internal drama would lead to the ousting and rehiring of CEO Cheng Lu, who returned after Hou, who had held the CEO, president and CTO posts, was fired by the board. Hou, who co-founded TuSimple in 2015 with Mo Chen, was also removed from his position as chairman of the board and member of the board’s government security committee.
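For a sense of scale, here is a minimal sketch of the arithmetic behind a peak-to-current comparison. It uses only the two prices quoted in this article (the July 2021 high and Thursday's price), and the function and variable names are illustrative. Note that this measures the drop from the peak, which is a different baseline than the 97% figure measured from late December 2021.

```python
def percent_decline(start_price, end_price):
    """Percentage drop from start_price to end_price."""
    return (start_price - end_price) / start_price * 100


peak_price = 62.58    # July 2021 high cited in this article
latest_price = 0.80   # Thursday's price cited in this article

decline = percent_decline(peak_price, latest_price)
print(f"Decline from peak: {decline:.1f}%")  # prints "Decline from peak: 98.7%"
```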

Hou’s firing came a day after The Wall Street Journal published a report citing unnamed sources that TuSimple was facing concurrent probes by the Federal Bureau of Investigation, Securities and Exchange Commission and Committee on Foreign Investment in the U.S. (CFIUS). The investigation was focused on TuSimple’s relationship with Hydron, a hydrogen-powered trucking company led by TuSimple co-founder Chen and backed by Chinese investors.

Hou has disputed the reason for the firing, posting on his LinkedIn account that he resigned from TuSimple’s board due to disagreements over Lu’s compensation package, as well as the company’s shift in focus from Level 4 autonomy to Level 2 autonomy.

TuSimple also lost Navistar as a partner in late December when the two companies ended a plan to co-develop self-driving trucks. A few weeks after the deal fell apart, TuSimple announced plans to lay off 25% of its total workforce, or about 350 workers, as part of a broader restructuring plan designed to keep the company running.

TuSimple continues its slide into the ether with new delisting warning by Kirsten Korosec originally published on TechCrunch

VanMoof updates its last-gen e-bikes with simplified X4 and S4 models

Dutch e-bike maker VanMoof is refreshing its entry level lineup with a pair of new bikes available in some very springy colors. The X4 and S4, out this month, retain the frame and philosophy of VanMoof’s previous X3 and S3 e-bikes while trimming some of the complexities that made those models notably high-tech but less reliable for some riders.

Gone is the Matrix display, the impressive screen on the bike’s top tube that displayed critical info like speed and battery levels. The X4 and S4 swap that display, which was well-loved though arguably excessive for this price tier, with a spartan phone mount (for built-in charging, you’ll need to move up to the luxury S5 and A5 models). These models have also swapped out the previous 3-speed gear shifting system for a simpler 2-speed shifter while retaining the speed boost button, most of the anti-theft technology with the exception of Apple integration and the general design and vibe.

Fans of last-gen’s X3 and S3 models may be skeptical about some of the changes, but VanMoof could win them over with one big improvement: color options. VanMoof’s always built some of the most stylish bikes around and it’s high time that potential buyers have more color choices. Prospective buyers now decide between evergreen, purple fog, sunbeam yellow and a foam green, with some fun contrasting bungee cord options that make those hues really pop. The two handsome green options are personally making me question buying a relatively unexciting sky blue X3 on sale last year.

Last year, VanMoof debuted a pair of higher-end e-bikes known as the S5 and A5, adding in a handlebar LED display, USB charging and a new motor for $3,998 (at the time of writing; VanMoof has a bad habit of tinkering with its pricing way too often). The company also previously announced an even higher-end, high concept, high speed bike called the VanMoof V, though who that’s really for or how much it will cost remains to be seen.

Hopefully the X4 and S4’s substantial design changes along with VanMoof’s $2,498 price point for these new bikes help the company’s operation become more sustainable. VanMoof was apparently in trouble toward the end of last year, nearly running out of cash and scrambling for an infusion of investment. While some reviewers, myself included (full disclosure: I bought an X3 last year), haven’t run into many problems, VanMoof needs to clean up its track record of reliability and quality control issues and offer a customer support experience that matches the thoughtful elegance of some of the best e-bikes on the market.

That crisis appears to have been averted, for now at least, and hopefully VanMoof is able to chart a smoother course and stay on the map for the foreseeable future.

VanMoof updates its last-gen e-bikes with simplified X4 and S4 models by Taylor Hatmaker originally published on TechCrunch

Hear how MinIO built a unicorn in object storage on top of Kubernetes and open source

Everything needs a home, and Garima Kapoor co-founded MinIO to build an enterprise-grade, open source object storage solution. The pitch sounds amazing: simple, high performance, and a native Kubernetes integration. I’m excited to announce I’m interviewing Kapoor and MinIO investor Mark Rostick of Intel Capital on MinIO’s growth and how the company manages serious competition.

This TechCrunch Live event is free to attend, and I hope you can make it. Register here.

It might not be one of the buzziest areas to grow a company, yet MinIO found a niche selling object storage, while competing directly with Amazon S3. Perhaps the open source component helps drive interest from its target developer market looking for an alternative to the cloud giant. It’s never easy competing with the likes of Amazon, yet MinIO has been able to find success.

Garima Kapoor co-founded MinIO in 2014 and has since grown the company to a billion-dollar valuation. Along the way, Kapoor raised $126.30 million from venture capital, including from Mark Rostick of Intel Capital.

I have a lot of questions about MinIO:

- Why did it get into a business competing directly with Amazon?

- What role did open source have in building a developer audience?

- How did cloud-native technologies contribute to its success?

- What did Mark and Intel Capital see in this company that made them think they could succeed in a crowded and mature market?

And you can ask questions too! Each week on TechCrunch Live, registered Hopin attendees can submit questions, and I’ll do my best to ask as many as possible.

TechCrunch Live is our weekly event series featuring top founders and investors. The show records live, most Wednesdays at 12:00 p.m. PDT. It’s free to register and attend. Watch past episodes on our YouTube channel and subscribe to the podcast here.

Hear how MinIO built a unicorn in object storage on top of Kubernetes and open source by Ron Miller originally published on TechCrunch

Pitch Deck Teardown: Fibery’s $5.2M Series A deck

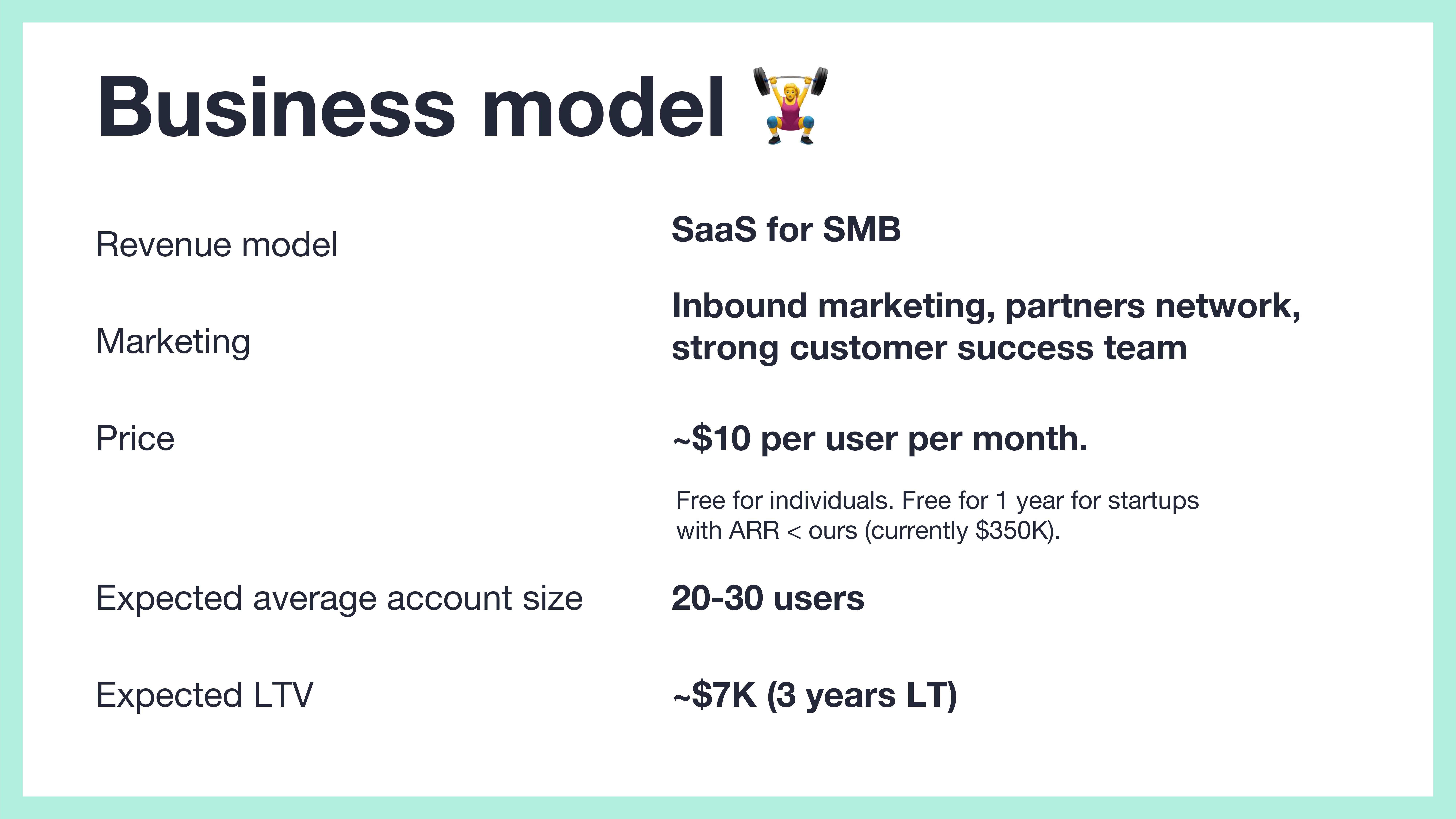

I love a good, clear business model, and Fibery’s is a pretty decent example here; it shows the high-level pricing model (multiaccount SaaS), the target market, and how it is pricing itself. It shows an expected LTV that’s pretty conservative (the $7,000 LTV using these numbers assumes an average account size of 20 or so).

Inbound marketing and customer success are both great when the customers are coming to you, and “partners network” is a little fuzzy. But it’s missing the customer acquisition cost. I’ll say a bit more about this below when I talk about things that could be improved but overall it’s a solid slide.

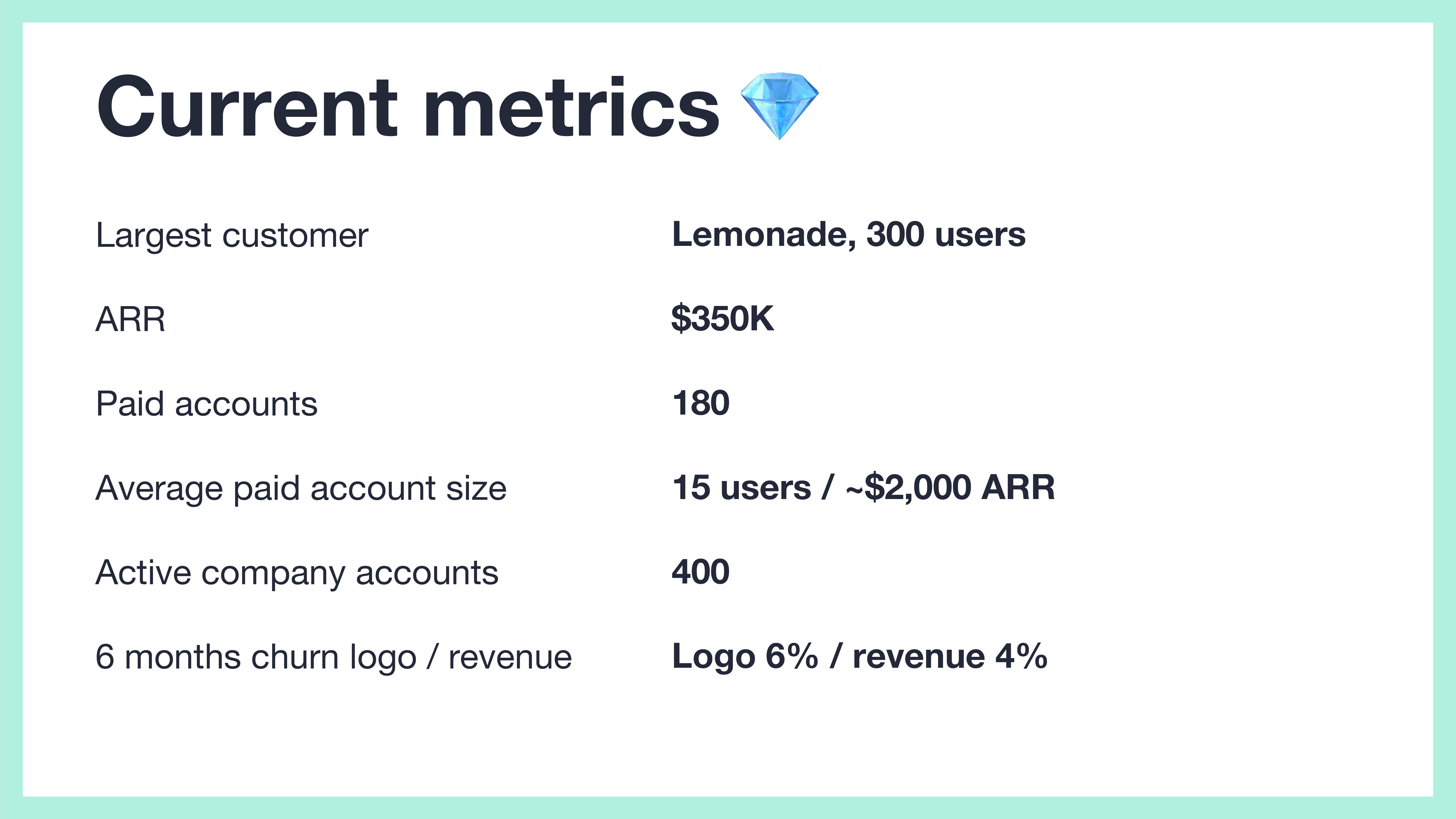

Metrics!

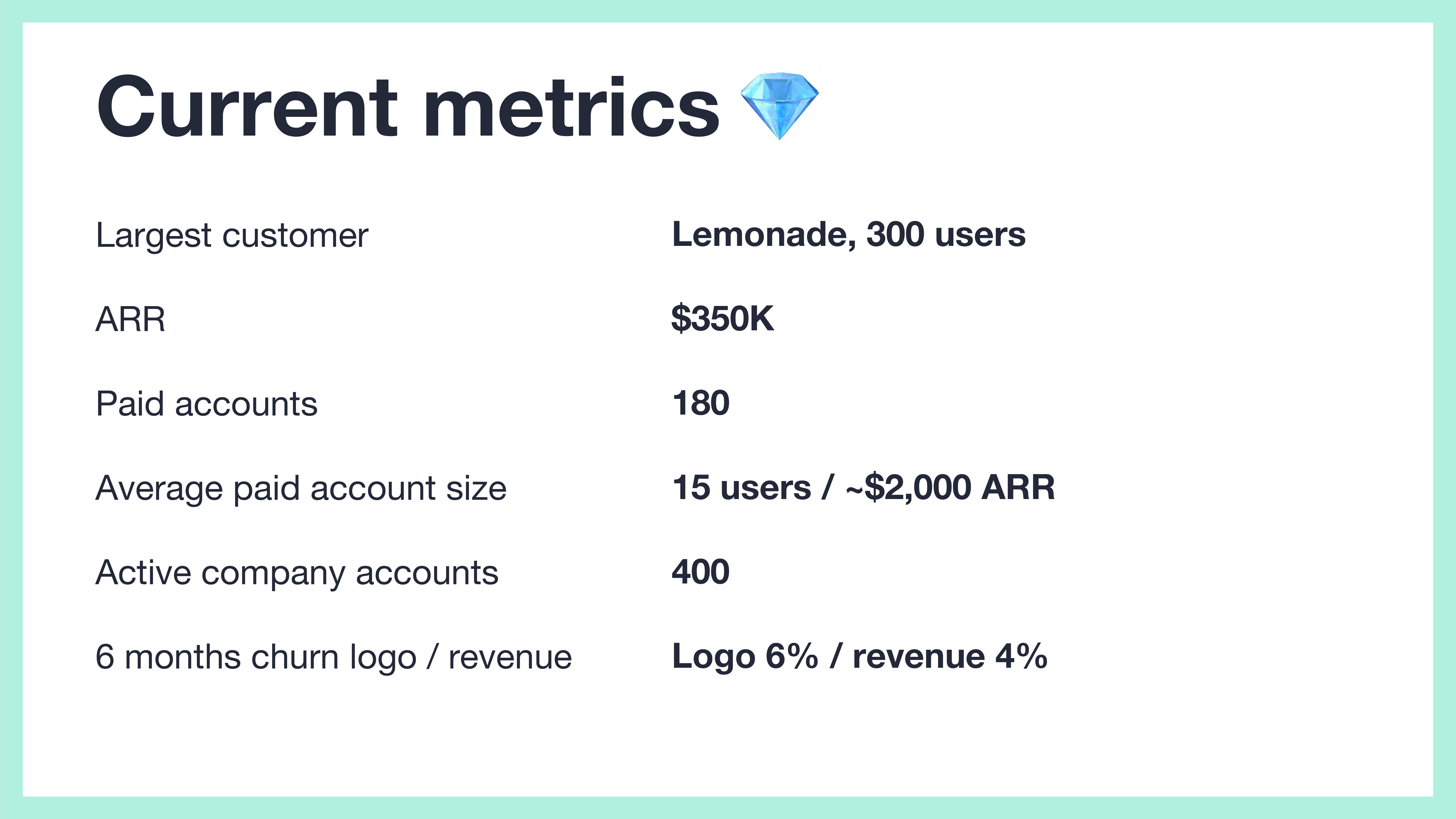

[Slide 12] Show me that traction. Image Credits: Fibery

A good traction slide forgives all sins, and this is a great snapshot of where the business is right now. The fact that the logo churn (i.e., churn of number of customers) is higher than the revenue churn indicates that the company is able to retain its higher-value customers. That’s good. The rest of the stats look good, too, for a company at this stage. Clear, simple, concise.

Still, I wish Fibery had shown some of these metrics as graphs. Having $350,000 annual recurring revenue is impressive, but if it had been stagnant for the past six months, that’d ring some warning bells. Investors don’t invest in snapshots, but in trends, so you may as well show them.

The other quirk is that the numbers are inconsistent. On slide 11, it says that the expected average account size is 20-30, but on this snapshot slide, it shows that Fibery currently has 15 paid users per account. Not saying anything about how it expects to grow that number makes me suspicious.

Bonus win: Great ask slide

[Slide 15] Yessssss. Image Credits: Fibery

The slide is labeled “plans,” but this is what I usually refer to as the “ask” slide.

Fibery is a really interesting startup that’s aiming to be the single source of truth and work for product companies. The Cyprus-based company raised a $5.2 million series A recently, and I wanted to take a closer look at what makes the startup tick.

We’re always looking for more unique pitch decks to tear down, so if you want to submit your own, here’s how you can do that.

Slides in this deck

Fibery raised its round with a 15-slide deck and shared the whole thing with TechCrunch+ unedited. That’ll give us a good idea of how the company landed its $5.2 million investment.

These are the slides:

- Cover slide

- Problem slide

- Solution slide

- Market-size slide

- Competitor slide

- Competitive analysis slide

- Product slide

- “Building blocks” slide

- Feedback/customer validation slide

- Go-to market strategy slide

- Business model slide

- Traction slide

- Milestones to date slide

- Team slide

- The ask slide

Four things to love

Click through the full deck and you’re treated to a lot of white space, simplicity and clarity. The company made a few unusual design choices, which really work for me, and the story hangs together well. But there are a few things that I particularly enjoyed.

A solid competitive analysis

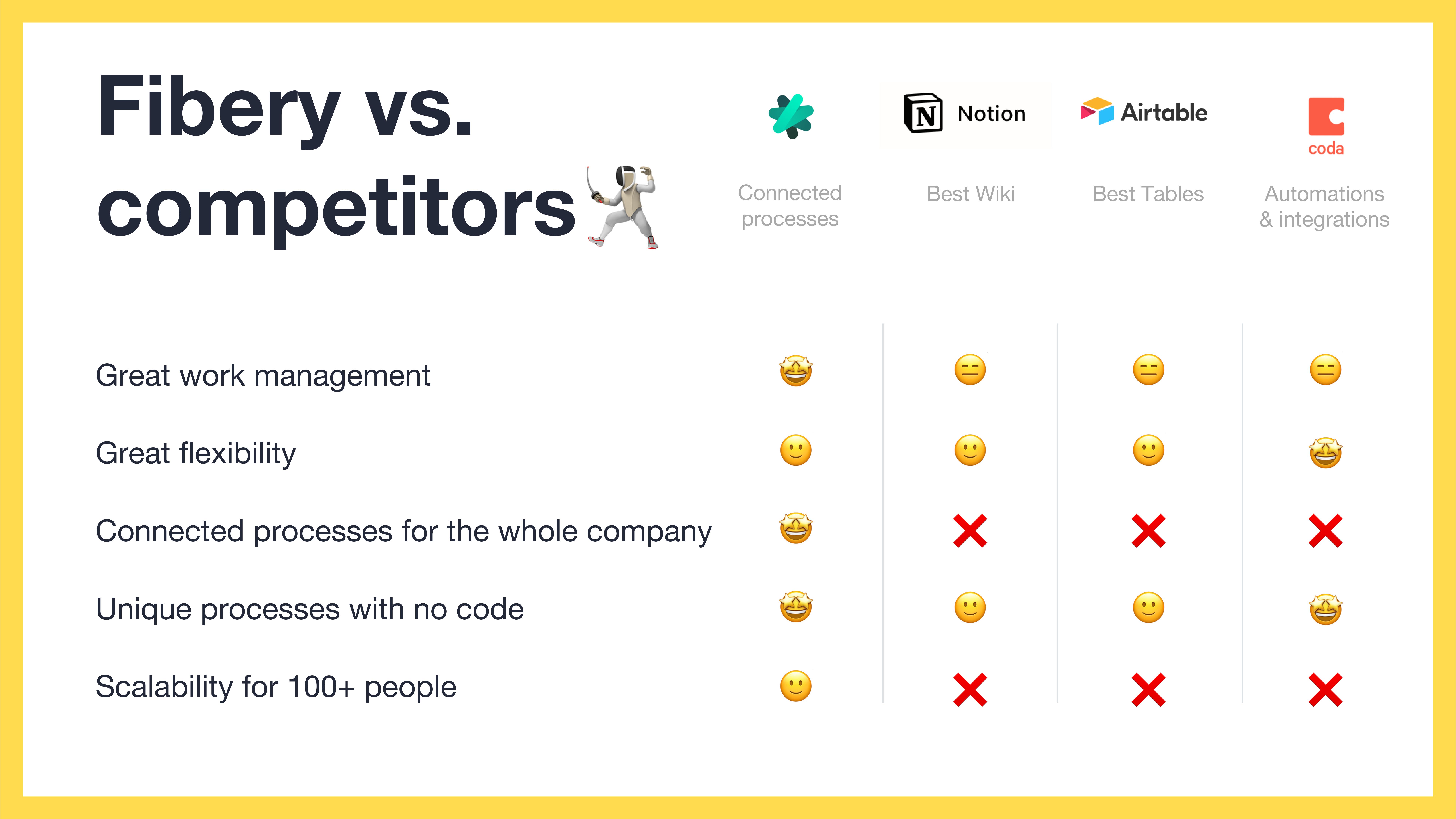

The company has two competition slides:

[Slide 5] Competition, part 1. Image Credits: Fibery

[Slide 6] Competition, part 2. Image Credits: Fibery

Competition slides are often an afterthought, either because startups underestimate their competitors, or because they claim that they don’t have any. Fibery does a really good job on both fronts.

On slide 5, the company breaks down both the existing players in this space in a really elegant way, and shows that there’s a big market worth going after. It even manages to identify some of the strengths in its competitors, which is always a nice touch — especially if the solution does something slightly different or is able to offer an additional set of features or an approach to the problem that unlocks a broader or different customer base. Investors who might be interested in this space will know Jira, Trello, Asana and Zendesk; Fibery is shrewdly positioning itself opposite a few multibillion dollar companies. Smooth.

On slide 6, there’s a slightly deeper dive into the other no-code tools Fibery considers to be competitors. Again, the company is choosing to praise its competitors for their strengths (“Best Tables” for Airtable and “Best Wiki” for Notion).

This helps give a deeper understanding of what the company perceives is its positioning in the market.

Great business model clarity

[Slide 11] Business model. Image Credits: Fibery

I love a good, clear business model, and Fibery’s is a pretty decent example here; it shows the high-level pricing model (multiaccount SaaS), the target market, and how it is pricing itself. It shows an expected LTV that’s pretty conservative (the $7,000 LTV using these numbers assumes an average account size of 20 or so).

Inbound marketing and customer success are both great when the customers are coming to you, and “partners network” is a little fuzzy. But it’s missing the customer acquisition cost. I’ll say a bit more about this below when I talk about things that could be improved but overall it’s a solid slide.

Metrics!

[Slide 12] Show me that traction. Image Credits: Fibery

A good traction slide forgives all sins, and this is a great snapshot of where the business is right now. The fact that the logo churn (i.e., churn of number of customers) is higher than the revenue churn indicates that the company is able to retain its higher-value customers. That’s good. The rest of the stats look good, too, for a company at this stage. Clear, simple, concise.
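To make the logo-churn versus revenue-churn point concrete, here is a minimal sketch with made-up numbers. The account names and MRR figures are hypothetical and are not taken from Fibery's deck. It shows how losing a couple of small accounts can produce a high logo churn while revenue churn stays low.

```python
def churn_rates(start_mrr_by_account, churned_accounts):
    """Return (logo_churn, revenue_churn) for one period.

    logo_churn    = churned accounts / accounts at the start of the period
    revenue_churn = MRR lost to churned accounts / MRR at the start of the period
    """
    total_accounts = len(start_mrr_by_account)
    total_mrr = sum(start_mrr_by_account.values())
    lost_mrr = sum(start_mrr_by_account[name] for name in churned_accounts)
    return len(churned_accounts) / total_accounts, lost_mrr / total_mrr


# Hypothetical book of business: two large accounts and three small ones.
accounts = {"a": 1000, "b": 800, "c": 100, "d": 50, "e": 50}
churned = {"d", "e"}  # only the two smallest accounts leave

logo, revenue = churn_rates(accounts, churned)
print(f"logo churn: {logo:.1%}, revenue churn: {revenue:.1%}")
# prints "logo churn: 40.0%, revenue churn: 5.0%"
```

When the gap runs in this direction, the customers leaving are the ones paying the least, which is the pattern the traction slide suggests.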

Still, I wish Fibery had shown some of these metrics as graphs. Having $350,000 annual recurring revenue is impressive, but if it had been stagnant for the past six months, that’d ring some warning bells. Investors don’t invest in snapshots, but in trends, so you may as well show them.

The other quirk is that the numbers are inconsistent. On slide 11, it says that the expected average account size is 20-30, but on this snapshot slide, it shows that Fibery currently has 15 paid users per account. Not saying anything about how it expects to grow that number makes me suspicious.

Bonus win: Great ask slide

[Slide 15] Yessssss. Image Credits: Fibery

The slide is labeled “plans,” but this is what I usually refer to as the “ask” slide. Lots of founders get this wrong, so it’s a delight to see it done so well. Fibery doesn’t explain what a “strong marketing” team is, and “setup and improve channels” doesn’t mean much. Having clear MRR and ARR goals for EOY 2023 and 2024 is great, and having a clear launch target for a new version of the platform is even better.

In the rest of this teardown, I’ll take a look at three things Fibery could have improved or done differently, along with its full pitch deck!

Pitch Deck Teardown: Fibery’s $5.2M Series A deck by Haje Jan Kamps originally published on TechCrunch

https://techcrunch.com/2023/05/11/sample-series-a-pitch-deck-fibery/

Meta is killing the Messenger app for Apple Watch on May 31

Meta is killing its Messenger for Apple Watch app on May 31, the company confirmed to TechCrunch on Thursday. Apple Watch users who have the app installed are being notified that “after May 31st, Messenger won’t be available as an Apple Watch app, but you can still get Messenger notifications on your watch.”

Although you will still be able to receive notifications for new messages, you won’t be able to respond to them and will instead have to use your iPhone app to do so.

“People can still receive Messenger notifications on their Apple Watch when paired, but starting at the beginning of June they will no longer be able to respond from their watch,” a Meta spokesperson told TechCrunch in an email. “But they can continue using Messenger on their iPhone, desktop and the web, where we are working to make their personal messages end-to-end encrypted.”

The Messenger app for Apple Watch offers a lot of convenience for its users, as you can still receive messages on your watch even when your iPhone is not paired with the wearable. Users are already taking to social media to voice their displeasure about the upcoming move.

NOT HAPPY @Apple @messenger #applewatch #wtf pic.twitter.com/B81aK6EUf3

— Amanda Nova (@M_anda_M) May 9, 2023

It’s unknown why Meta has decided to discontinue its Messenger app for watchOS. Messenger joins a list of other platforms that have sunsetted their Apple Watch apps over the past several years, including Slack, Uber and Twitter.

The change comes as Meta’s other messaging app, WhatsApp, is working on a native Wear OS app. Earlier this week, WhatsApp launched a beta version of its app compatible with Google’s smartwatch platform. People using WhatsApp beta for Android version 2.23.10.10 will be able to link their Wear OS-based smartwatch. Users will be able to check their messages on the smartwatch, and all chats will be end-to-end encrypted. Google said that support for calls and starting conversations will arrive once the app is available to all users.

The move also comes as Meta has been introducing some changes around Messenger. A few months ago, the company began testing the ability for users to access their Messenger inbox within the Facebook app. Back in 2016, Facebook removed messaging capabilities from its mobile web application to push people to the Messenger app, in a move that angered many users. Now, the company is testing a reversal of this decision.

Meta is killing the Messenger app for Apple Watch on May 31 by Aisha Malik originally published on TechCrunch

https://techcrunch.com/2023/05/11/meta-killing-messenger-app-apple-watch-may-31/